Ultimate Guide to Software Testing: Definitions, Types, Testing Methods, Applications, and Essential Tools for Success

Software testing is crucial for delivering high-quality applications. It involves a meticulous process of identifying and eliminating bugs through various testing methods, ensuring the software functions smoothly for users. A well-defined testing strategy acts as a roadmap, outlining the testing approach based on factors like application complexity and project timelines. This strategy translates into a detailed test plan, specifying the test cases, tools, and success criteria.

Effective test execution relies on strategic resource allocation. A skilled testing team, specialized testing tools, and a dedicated testing environment are all vital components. By adhering to a well-defined plan and strategically allocating resources, software testing ensures high-quality applications that meet user expectations. Stay tuned as we delve deeper into the nuances of different testing types, essential tools, and critical roles that contribute to successful software testing.

What Is Software Testing?

Software testing is the process of evaluating a software application to find bugs and issues before its release. It verifies that the software behaves and performs as intended. Testing can be conducted manually or through automated test scripts, and it helps ensure that the software product is functional, user-friendly, reliable, and secure. By rigorously testing the application, developers can identify and address potential problems early, which leads to a higher-quality final product.

What Is the History of Software Testing?

The history of software testing has evolved significantly since its humble beginnings alongside the development of early computers. Here's a breakdown of its historical journey: early era, standardization era, modern era, and the emerging trends.

- Early Era (1950s - 1970s):

- Defect Investigation vs. Functionality Verification: The distinction between debugging (fixing errors) and testing (verifying functionality) emerged during this period.

- Demonstration-oriented: In this era, testing focused on ensuring the software met pre-defined requirements. Testers manually verified features, often through demonstrations.

- Limited Scope: Testing practices were primarily reactive, aiming to identify existing issues rather than proactively preventing them.

- Standardization Era (1980s - 1990s):

- Formalization: Structured methodologies such as black-box and white-box testing brought a more systematic approach.

- Quality Focus: In this era, the rise of quality standards like ISO 9000 emphasized the importance of formal testing processes for software quality assurance.

- Landscape Shift: The emergence of personal computers and complex software applications necessitated more rigorous testing strategies.

- Modern Era (2000s - Present):

- Agile Revolution: In this era, agile development methodologies, with their emphasis on iterative development and continuous testing, led to a more integrated testing approach.

- Automation Surge: Automation tools and frameworks gained prominence, enabling faster and more efficient testing cycles.

- Diversification: The rise of mobile apps, cloud computing, and web services diversified the testing landscape and required specialized testing techniques.

- Emerging Trends:

- Shift-Left Testing: Testing is being integrated earlier in the development lifecycle so that defects are caught before they carry over into later stages.

- AI and Machine Learning: The use of AI and ML in testing is on the rise, facilitating test automation, performance optimization, and anomaly detection.

This concise breakdown provides a historical perspective on software testing, highlighting the evolving practices, methodologies, and future trends in this crucial field.

What Is Software Quality?

Software quality is the measure of a software product’s ability to meet its requirements and satisfy user needs. It is commonly assessed through two approaches: defect management and quality attributes. High-quality software aligns with stakeholder needs, accurately reflecting their expectations. Defined as the capability to meet stated and implied needs under specified conditions, software quality covers both functionality and usability. Even if software performs correctly according to specifications, it’s not considered high quality if it’s unusable or difficult to maintain. Therefore, true software quality integrates both technical correctness and practical usability, ensuring reliable and user-friendly products.

What Is Defect Management Approach in Software Quality?

The Defect Management Approach in software quality involves identifying, documenting, prioritizing, tracking, and resolving defects (bugs or issues) in a product. This process helps maintain and improve the overall quality of the software by ensuring that defects are addressed promptly and effectively throughout development. It uses tools such as defect leakage matrices (which count how many defects pass through development phases before being detected) and control charts (which flag when defect counts move outside their normal range) to improve the development process.
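The two tools mentioned above can be sketched in a few lines. This sketch assumes a defect log that records, for each defect, the phase in which it was introduced and the phase in which it was detected; the phase names, sample data, and function names are hypothetical. The control limits use a simplified mean ± 3 standard deviations of a baseline period, whereas formal control charts (for example, c-charts for defect counts) estimate the spread differently.

```python
from collections import Counter
from statistics import mean, pstdev

# Hypothetical phase names, in lifecycle order.
PHASES = ["requirements", "design", "coding", "testing", "production"]

def leakage_matrix(defects: list[tuple[str, str]]) -> list[list[int]]:
    """Rows: phase where a defect was introduced; columns: phase where it was detected."""
    counts = Counter(defects)
    return [[counts[(introduced, detected)] for detected in PHASES] for introduced in PHASES]

def leaked_count(defects: list[tuple[str, str]]) -> int:
    """Number of defects detected in a later phase than the one that introduced them."""
    return sum(1 for introduced, detected in defects
               if PHASES.index(detected) > PHASES.index(introduced))

def control_limits(baseline: list[int], sigmas: float = 3.0) -> tuple[float, float, float]:
    """Simplified limits: baseline mean +/- `sigmas` population standard deviations.
    The lower limit is clamped at 0 because defect counts cannot be negative."""
    center = mean(baseline)
    spread = sigmas * pstdev(baseline)
    return max(0.0, center - spread), center, center + spread

def out_of_control(baseline: list[int], new_counts: list[int]) -> list[int]:
    """Indices of new builds whose defect counts fall outside the baseline limits."""
    low, _, high = control_limits(baseline)
    return [i for i, n in enumerate(new_counts) if not low <= n <= high]

# Hypothetical defect log: (phase introduced, phase detected).
defects = [("coding", "coding"), ("coding", "testing"), ("design", "testing"),
           ("requirements", "production"), ("coding", "production")]
for phase, row in zip(PHASES, leakage_matrix(defects)):
    print(f"{phase:>12}: {row}")
print("leaked past their phase:", leaked_count(defects))

baseline = [4, 6, 5, 7, 5, 6, 4, 5]  # hypothetical defects per build
print("builds outside limits:", out_of_control(baseline, [5, 6, 19, 3]))
```

With this data, four of the five defects leaked past the phase that introduced them, and only the build with 19 defects falls outside the control limits.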

What Is Quality Attributes Approach in Software Quality?

The Quality Attributes Approach in software quality focuses on measurable and testable properties that meet user needs. These attributes, crucial in software design, enhance user satisfaction and product quality.

Exemplified by standards like ISO/IEC 25010:2011, the Quality Attributes Approach includes characteristics such as functional suitability, performance efficiency, compatibility, usability, reliability, security, maintainability, and portability.

What Are the Different Software Quality Factors?

When it comes to software quality, several crucial factors play a significant role in determining the overall excellence of a software product. Commonly cited factors include reliability, efficiency, usability, maintainability, portability, and functionality.

- Reliability: Users depend on software to perform consistently without errors. Reliability ensures that the software operates as intended under various conditions.

- Efficiency: Efficient software utilizes system resources optimally, ensuring swift performance and minimal resource consumption.

- Usability: User-friendly interfaces and intuitive design enhance usability, enabling users to interact with the software effortlessly.

- Maintainability: Software should be easy to maintain and modify to adapt to changing requirements or address issues efficiently.

- Portability: Portability allows software to run across different platforms and environments seamlessly, maximizing its accessibility and utility.

- Functionality: The core purpose of software, its functionality, must meet user expectations and fulfill specified requirements accurately.

By prioritizing these software quality factors during development, organizations can ensure the delivery of high-quality software that meets user needs effectively, thereby enhancing customer satisfaction and market competitiveness.

Improving software quality requires effective testing. Explore our detailed guide on the Test Maturity Model (TMM) to understand how it assesses the maturity of an organization's testing process through a series of defined levels, helping teams strengthen quality dimensions like functionality, reliability, security, and performance. By leveraging TMM, organizations can enhance their testing methodologies and overall software quality.

What Are the Goals of Software Testing?

The goals of software testing are to ensure software quality, improve usability, enhance reliability, boost performance, guarantee security, and ensure compliance with requirements.

- Ensure Software Quality: The primary goal of software testing is to identify defects, errors, and bugs in the software before it's released to users. This helps to ensure that the software meets the specified requirements and functions as intended.

- Improve Usability: Usability testing's goal is to assess how users interact with the software. It helps to identify areas where the software is difficult to use and make recommendations for improvement.

- Enhance Reliability: A key goal of software testing is to identify and fix reliability issues that could cause the software to crash, freeze, or behave unexpectedly.

- Boost Performance: Performance testing's goal is to measure the speed, stability, and scalability of the software. This information can be used to identify bottlenecks and optimize the software for performance.

- Guarantee Security: Security testing's goal is to identify vulnerabilities in the software that could be exploited by attackers. This helps to protect the software and its users from security threats.

- Compliance With Requirements: Software testing serves the critical goal of verifying that the software complies with its specified requirements as well as with all applicable laws, regulations, and industry standards.

What Are the Benefits of Software Testing?

Software testing offers nine key benefits: earlier defect detection, increased customer satisfaction, cost reduction, improved product quality and reliability, a quicker development process, easier addition of new features, enhanced security, easier recovery, and enhanced agility.

- Earlier Defect Detection: A benefit of software testing is finding and fixing defects early in the development process. This is significantly cheaper and easier than fixing them after the software is complete. By working alongside developers from the start, testers can identify issues as they arise.

- Increased Customer Satisfaction: A benefit of software testing is delivering a high-quality product that meets user needs. This is essential for customer satisfaction. Software testing reduces the number of defects that reach users and confirms that the application functions as intended, leading to happier customers.

- Cost Reduction: A benefit of software testing is cost-effective defect management. Fixing bugs early in development is more economical than fixing them later. Additionally, software testing helps reduce maintenance costs by identifying potential problems before they occur.

- Improved Product Quality and Reliability: A benefit of software testing is verifying software functionality. It ensures the software meets user requirements and performs as expected. This includes compatibility testing to ensure the application works on various devices and operating systems.

- Quicker Development Process: A benefit of software testing is streamlining the development process. By identifying defects early, testers can help developers fix them quickly. This allows for faster delivery of the final product.

- Easier Addition of New Features: A benefit of software testing is maintaining code quality during feature development. Well-written tests make it easier for developers to modify the codebase when adding new features. This reduces the risk of introducing new bugs in the process.

- Enhanced Security: A benefit of software testing is enhancing application security. It includes security testing methods to identify vulnerabilities and potential security breaches. This helps to protect users' data and ensure the overall security of the application.

- Easier Recovery: A benefit of software testing is improving application resilience. Software testing helps assess how quickly an application recovers from failures. Testers can identify scenarios where failures are likely to occur and measure recovery times. This information helps developers improve the application's resilience.

- Enhanced Agility: A benefit of software testing is supporting agile development practices. Agile development methodologies emphasize collaboration, iteration, and continuous improvement. Software testing aligns well with these principles by enabling frequent and early testing, reducing errors and rework.

What Are the Different Types of Software Testing?

There are two distinct types of software testing: manual testing and automation testing. Manual testing relies on human execution of predefined test cases, while automation involves machine-executed scripts for automatic validation. Each method offers unique advantages in ensuring software quality.

- Manual Testing: Manual testing involves testers executing predefined test cases by interacting directly with the software, checking its functions and features. Though time-consuming and prone to human error, manual testing is essential for ensuring software quality. It complements automation testing, which relies on pre-written scripts for validation, speeding up the testing process.

- Automation Testing: Automation testing involves writing test scripts to be executed by a machine, validating software functionality automatically. It makes testing faster and more repeatable than manual methods, reducing human error. Integral to continuous integration and delivery, it accelerates the QA process, though manual testing remains valuable for exploratory checks.
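As a minimal illustration of an automated test script, here is a check written with Python's built-in `unittest` module. The `apply_discount` function and its rules are hypothetical stand-ins for application code; in a continuous integration pipeline, a command such as `python -m unittest` would run scripts like this on every change.

```python
import unittest

def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test: reduce `price` by `percent` percent."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)

class ApplyDiscountTest(unittest.TestCase):
    """Each method is a scripted check that a machine can rerun identically on every build."""

    def test_typical_discount(self):
        self.assertEqual(apply_discount(200.0, 15), 170.0)

    def test_zero_discount_keeps_price(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_out_of_range_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(50.0, 120)

if __name__ == "__main__":
    # Runs the suite and reports results without calling sys.exit (unlike unittest.main()).
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
    unittest.TextTestRunner(verbosity=2).run(suite)
```

A manual tester would perform the same three checks by hand; the script's advantage is that it costs nothing to rerun after every code change.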

What Are the Various Approaches to Software Testing?

The various approaches to software testing include White Box Testing, Black Box Testing, and Gray Box Testing. White Box Testing involves examining the source code, Black Box Testing focuses on functionality, and Gray Box Testing combines both approaches for comprehensive testing.

- White Box Testing: White box testing, also called clear or transparent testing, involves testing an application with complete access to source code. It delves into the internal workings of software, uncovering hidden errors and optimizing code. White box testing evaluates security and functionality, requiring understanding of coding structure. It's used for verification at unit, integration, and system levels.

- Black Box Testing: Black box testing assesses software functionality without knowledge of its internal code structure. Testers focus on inputs and outputs, identifying discrepancies with the specifications. It's vital for evaluating the software from an end user's perspective. This method ensures software behavior aligns with requirements and is applicable across testing levels.

- Gray Box Testing: Gray box testing combines elements of both black and white box testing. Testers have limited knowledge of the system's internal workings, aiding in effective test case design. This hybrid approach is ideal for identifying structural issues and is valuable in web application testing. It's essential for integration and penetration testing, evaluating system vulnerabilities against malicious attacks.
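The difference between black-box and white-box testing lies mainly in where the test cases come from. In the sketch below, which uses a hypothetical `shipping_fee_cents` function (amounts in integer cents to avoid floating-point rounding), the black-box checks are written from the stated rule alone, while the white-box checks are chosen by reading the code so that each branch, including the exact threshold value, is exercised. A gray-box tester would sit in between, for example knowing that a threshold exists without seeing the full implementation.

```python
def shipping_fee_cents(order_total_cents: int, express: bool = False) -> int:
    """Hypothetical rule: orders of 5000 cents or more ship free, otherwise 599 cents;
    express delivery always adds a 1000-cent surcharge."""
    fee = 0 if order_total_cents >= 5000 else 599
    if express:
        fee += 1000
    return fee

def black_box_checks() -> None:
    # Derived only from the stated rule: pick inputs, compare outputs to the spec.
    assert shipping_fee_cents(2000) == 599
    assert shipping_fee_cents(8000) == 0
    assert shipping_fee_cents(8000, express=True) == 1000

def white_box_checks() -> None:
    # Chosen by reading the code: exercise both outcomes of each decision,
    # including the exact value where `>= 5000` flips.
    assert shipping_fee_cents(5000) == 0
    assert shipping_fee_cents(4999) == 599
    assert shipping_fee_cents(4999, express=True) == 1599

black_box_checks()
white_box_checks()
print("black-box and white-box checks passed")
```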

What Are the Testing Categories?

There are two testing categories: Functional Testing, validating features, and Non-functional Testing, assessing performance and usability for software reliability and user satisfaction.

- Functional Testing: Functional Testing ensures software systems meet specified requirements by validating features, data handling, and user interactions. It focuses on actual system usage, comparing results against predetermined specifications. Using Black Box Testing techniques, it checks that each function delivers the expected output.

- Non-functional Testing: Non-functional Testing evaluates software systems based on non-functional parameters like performance and usability. Unlike functional testing, it ensures the system's behavior aligns with non-functional requirements. This type of testing examines aspects not covered in functional tests, ensuring software reliability and user satisfaction.
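The split between the two categories shows up even in a tiny check. The sketch below pairs a functional assertion (is the answer correct?) with a non-functional one (is it fast enough?) for a hypothetical `contains` function built on Python's `bisect` module. The 0.5-second budget for 10,000 lookups is an assumed requirement; real performance testing uses dedicated tools, realistic load, and repeated measurements rather than a single timing.

```python
import bisect
import time

def contains(sorted_items: list[int], target: int) -> bool:
    """Hypothetical function under test: binary search in a sorted list."""
    i = bisect.bisect_left(sorted_items, target)
    return i < len(sorted_items) and sorted_items[i] == target

data = list(range(0, 200_000, 2))  # even numbers only, already sorted

# Functional checks: does the function return the right answer?
assert contains(data, 100_000) is True
assert contains(data, 100_001) is False

# Non-functional check: does it meet the (assumed) response-time budget?
start = time.perf_counter()
for value in range(10_000):
    contains(data, value)
elapsed = time.perf_counter() - start
assert elapsed < 0.5, f"performance budget exceeded: {elapsed:.3f}s"
print(f"10,000 lookups took {elapsed * 1000:.1f} ms")
```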

What Are the Different Levels of Software Testing?

Different levels of software testing ensure comprehensive evaluation, catching bugs early, verifying module interactions, validating the entire system, and ensuring user satisfaction.

- Unit Testing: Unit Testing involves testing individual components or modules of a software application in isolation. This level of testing focuses on verifying the correctness of specific sections of code, typically using automated tests written by developers. It helps in identifying bugs early in the development process, ensuring code reliability and functionality.

- Integration Testing: Integration Testing assesses the interaction between integrated modules or components of a software system. This level of testing ensures that combined parts work together as expected, identifying issues in the interfaces and communication between modules. It helps in detecting problems that may not arise in isolated unit tests.

- System Testing: System Testing evaluates the complete and integrated software system as a whole. This level of testing checks the system's compliance with the specified requirements and overall quality. It covers functional and non-functional testing, ensuring the software behaves correctly in a production-like environment and meets business needs.

- Acceptance Testing: Acceptance Testing is the final level of testing before the software is released to the end user. It verifies that the software meets the acceptance criteria and satisfies the business requirements. This level of testing is usually performed by the end-users or clients to ensure the software is ready for deployment.
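The unit and integration levels differ mainly in which collaborators are real and which are replaced. In this sketch (all class names are hypothetical), the unit test checks `CartService` in isolation with a stub repository, while the integration test wires it to a real repository backed by an in-memory SQLite database from Python's standard library. System and acceptance tests would exercise the full deployed application and are not shown.

```python
import sqlite3

class SqliteProductRepo:
    """Real component: reads product prices (in cents) from a SQLite database."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn

    def price_of(self, sku: str) -> int:
        row = self.conn.execute(
            "SELECT price_cents FROM products WHERE sku = ?", (sku,)
        ).fetchone()
        if row is None:
            raise KeyError(sku)
        return row[0]

class CartService:
    """Unit under test: totals a cart using whatever repository it is given."""

    def __init__(self, repo) -> None:
        self.repo = repo

    def total(self, skus: list[str]) -> int:
        return sum(self.repo.price_of(sku) for sku in skus)

class StubRepo:
    """Test double with canned prices, so CartService can be tested in isolation."""

    def __init__(self, prices: dict[str, int]) -> None:
        self.prices = prices

    def price_of(self, sku: str) -> int:
        return self.prices[sku]

def unit_test_cart_total() -> None:
    # Unit level: no database involved, only CartService's own logic.
    service = CartService(StubRepo({"A": 250, "B": 100}))
    assert service.total(["A", "B", "B"]) == 450

def integration_test_cart_with_sqlite() -> None:
    # Integration level: CartService and SqliteProductRepo working together.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE products (sku TEXT PRIMARY KEY, price_cents INTEGER)")
    conn.executemany("INSERT INTO products VALUES (?, ?)", [("A", 250), ("B", 100)])
    service = CartService(SqliteProductRepo(conn))
    assert service.total(["A", "B", "B"]) == 450
    conn.close()

unit_test_cart_total()
integration_test_cart_with_sqlite()
print("unit and integration checks passed")
```

If the unit test passes but the integration test fails, the fault most likely lies in the interface between the two components (for example, a wrong column name in the SQL), which is exactly the class of issue integration testing targets.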

What Is a Software Test Model?

A software testing model is a structured approach to planning and executing software testing activities. It defines a framework for designing test cases, selecting test data, and evaluating test results. By following a software testing model, software development teams can ensure that their software is thoroughly tested and meets the specified requirements.

There are several different software testing models, each with its own advantages and disadvantages. The most appropriate model for a particular project will depend on the specific needs of the project, such as the size and complexity of the software, the development methodology being used, and the available resources. Some of the software testing models are Waterfall Model, V-Model, Agile Model, Spiral Model, and Iterative Model.

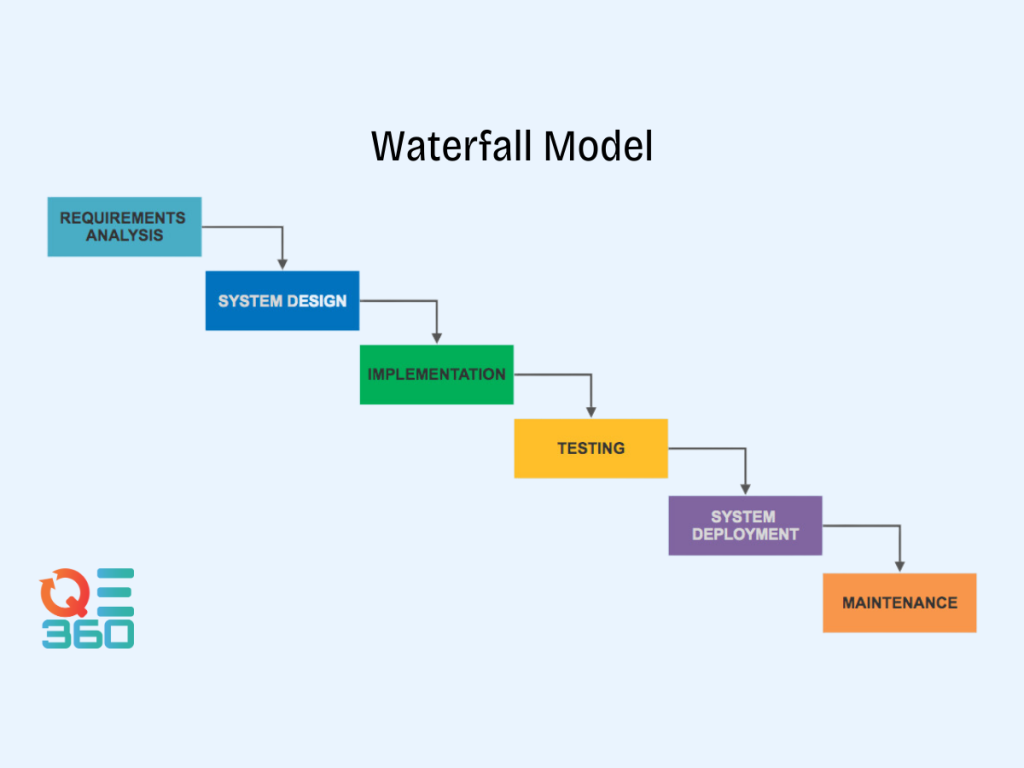

- Waterfall Model:

- The Waterfall Model is a traditional software development approach where the project progresses through linear stages, like requirements gathering, design, development, testing, and deployment. Each stage must be completed before the next begins and relies on the output of the prior phase. This structured approach, with specialized tasks in each stage, makes it easy to understand, but it is less flexible and not iterative, so changes late in the process can be complex and expensive.

Waterfall Model Diagram

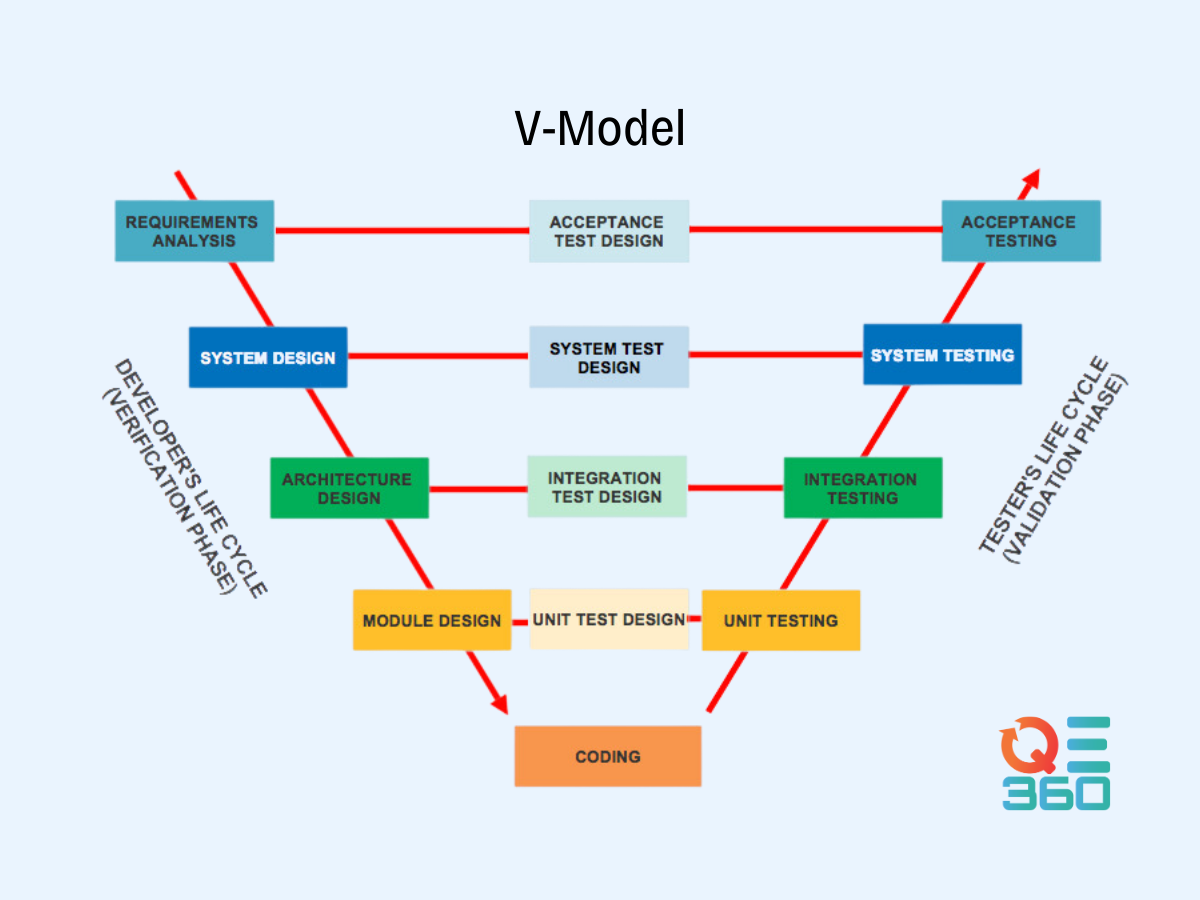

- V-Model:

- The V-model is a software development approach based on the Waterfall Model. It visualizes development phases like requirements and design on one side, mirroring corresponding testing phases (unit, integration) on the other, forming a V-shape. This emphasizes the planned testing activities for each development stage. Like the Waterfall Model, it offers a structured approach but can be inflexible for projects with evolving requirements.

V-Model Flowchart

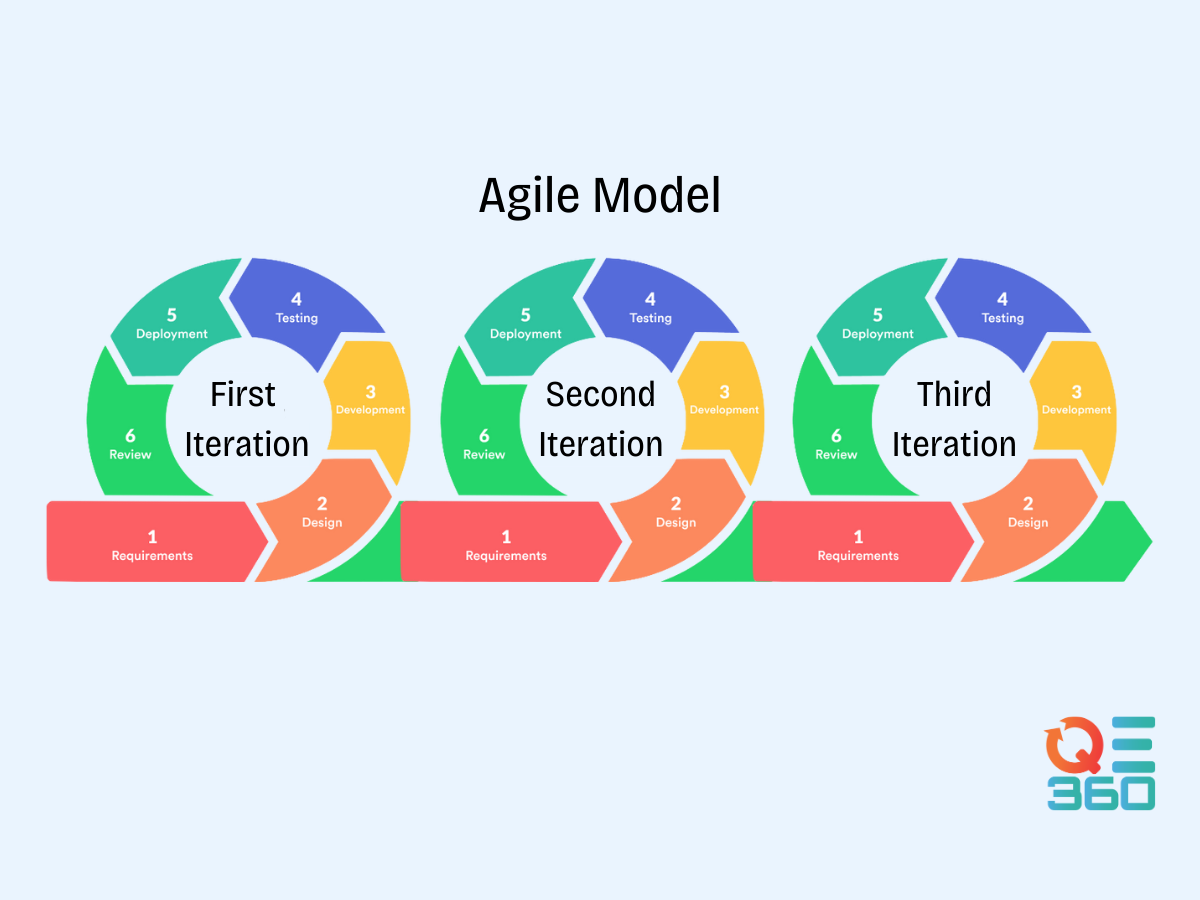

- Agile Model:

- The Agile model, guided by the Agile Manifesto, is an iterative and collaborative approach to software development. It prioritizes close communication (often face-to-face) within self-organizing teams. Unlike traditional models, Agile embraces change and views evolving requirements as an opportunity. Work is broken down into short, time-boxed cycles with frequent deliveries, allowing for continuous testing and adaptation throughout the development process. This flexible and responsive approach makes Agile well-suited for projects with uncertainty and a need for frequent feedback.

Agile Model Flowchart

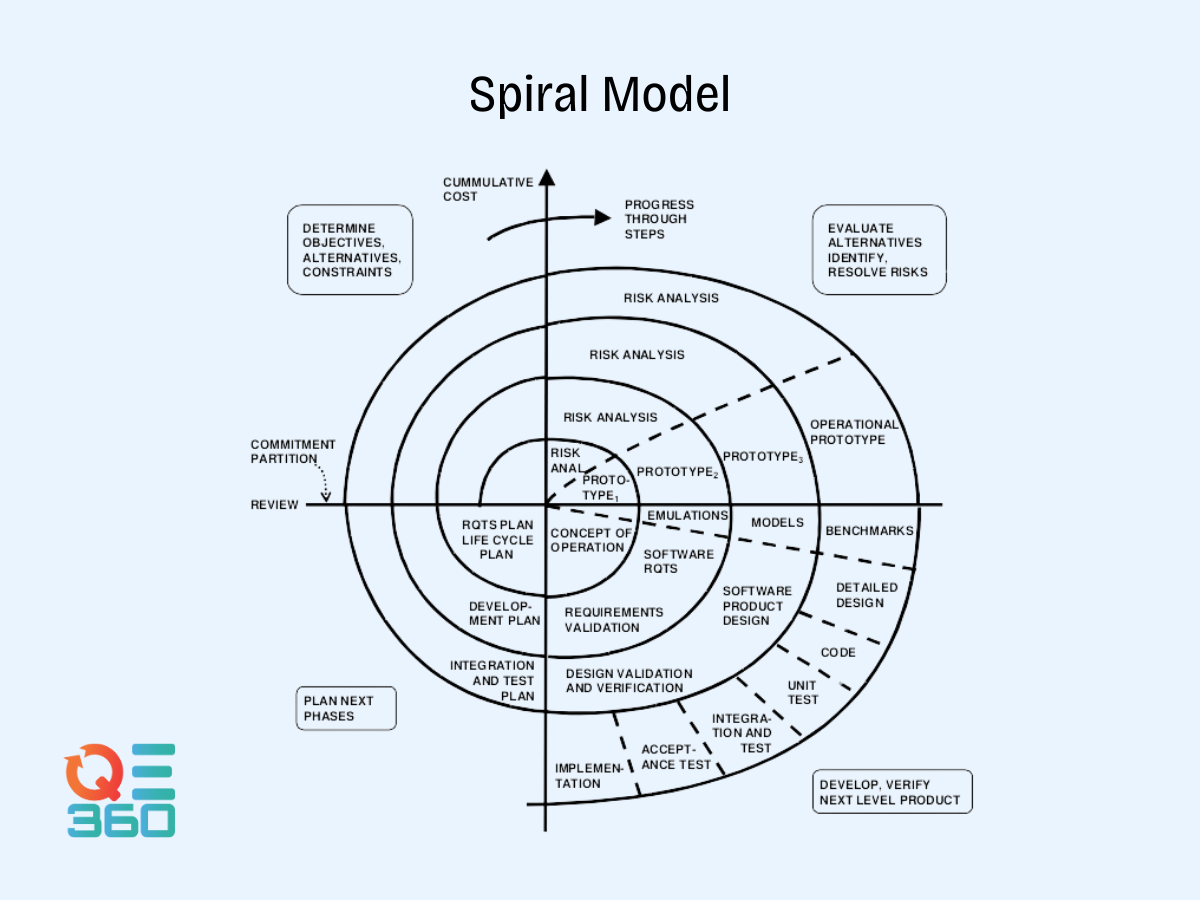

- Spiral Model:

- The Spiral Model, introduced by Barry Boehm, is a risk-focused approach to software development. Unlike the Waterfall or V-model, it's not a linear progression. Instead, it resembles a spiral staircase, where cycles revisit and refine stages based on risk assessments. High-risk areas receive more testing and development iterations, while low-risk areas may proceed faster. This flexibility makes it well-suited for projects with unknowns or evolving requirements.

Spiral Model Diagram

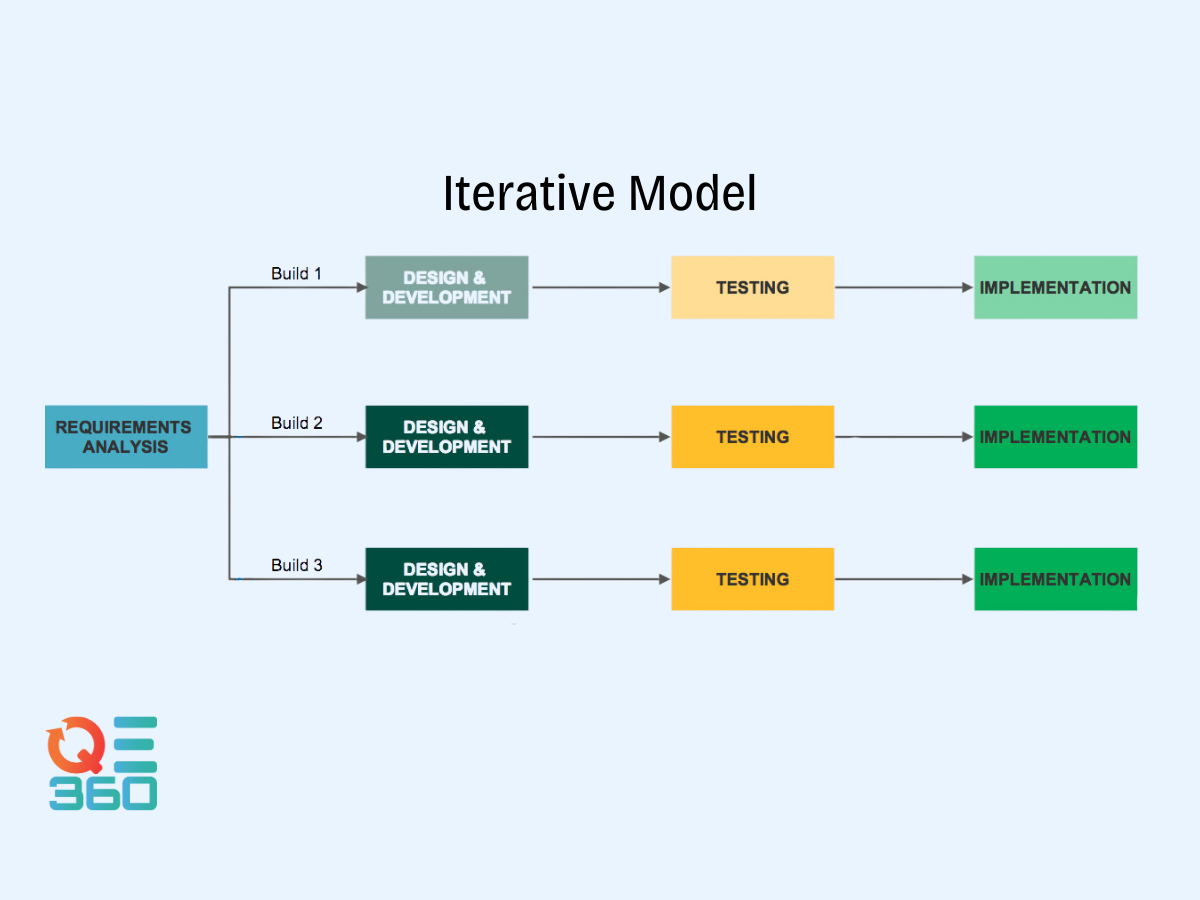

- Iterative Model:

- The iterative model tackles software development in smaller, manageable stages called iterations. Each iteration focuses on a specific set of features and involves activities like planning, design, development, and testing. The iterative model constructs the software incrementally, allowing for early feedback, reduced risk of major issues, and adaptation to changing requirements throughout the process.

Iterative Model Flowchart

What Are the Different Phases in the Software Testing Life Cycle?

The software testing life cycle (STLC) is a framework that defines the different stages involved in software testing. Each stage has its own goals and deliverables, and they are typically executed in a sequential order. Following a well-defined STLC ensures a comprehensive and systematic approach to testing, improving the overall quality of the software.

There can be variations in the number and names of the phases included in the STLC depending on the specific methodology used. However, some of the common phases include Requirement Analysis, Test Planning, Test Case Design, Test Environment Setup, Test Execution, and Test Closure:

- Requirement Analysis: In this initial phase, testers carefully examine and understand the software requirements outlined in the system requirements documents (SRDs) and other relevant documents. This includes understanding the functional and non-functional requirements, as well as the intended use cases of the software. A thorough requirement analysis is essential for defining effective testing strategies and designing comprehensive test cases.

- Test Planning: During test planning, testers establish a roadmap for the testing process. This involves activities such as defining the testing scope, identifying the testing tools and resources required, and creating a test schedule. The test plan serves as a blueprint for executing the testing process efficiently and effectively.

- Test Case Design: In this phase, testers design individual test cases that will be used to verify if the software meets the specified requirements. Test cases should cover both positive and negative scenarios, and they should be designed to identify a wide range of potential defects.

- Test Environment Setup: A test environment replicates, as closely as practical, the production environment where the software will be deployed. Setting up a test environment involves installing the software, configuring necessary hardware and software components, and preparing any required test data.

- Test Execution: During test execution, testers meticulously run the designed test cases against the software in the test environment. This involves recording the test results, identifying any defects encountered, and logging them for further analysis.

- Test Closure: After test execution is complete, testers analyze the overall test results, identify trends or patterns in the defects found, and prepare test reports that document the testing activities, findings, and recommendations. Test closure also involves evaluating the effectiveness of the testing process and identifying areas for improvement in future testing cycles.

By following a well-defined testing process with distinct stages, software development teams can systematically identify and address issues, ultimately delivering high-quality and reliable software products.

To gain a deeper understanding of the Software Testing Life Cycle, we recommend consulting our informative article, What is Software Testing Life Cycle?

How To Do Software Testing?

Software testing involves several essential steps: test plan creation, test case development, test automation, test execution, test result analysis and defect reporting, and test reporting.

- Test Plan Creation: Begin by defining the scope, resources, and schedule of testing in alignment with application requirements.

- Test Case Development: Craft test scenarios from a user's perspective to ensure the application functions as intended. This includes basic, alternative, and edge-case tests.

- Test Automation: Automate repetitive test cases using scripts to enhance efficiency, particularly for functional tests prone to human error or time-consuming processes.

- Test Execution: Thoroughly validate all application functionalities against requirements, including performance and security tests when necessary.

- Test Result Analysis and Defect Reporting: Comprehensively analyze test outcomes, identify bugs or defects, and report them to developers for resolution.

- Test Report: Document the testing process, results, and identified defects in a report for stakeholders, serving as a communication tool and historical record.

How To Design Software Tests?

Designing software tests involves carefully planning and creating test cases to comprehensively evaluate the software's functionality. By methodically testing various scenarios, software testers can identify and eliminate bugs before the software reaches production.

Techniques for Software Test Design

There are various techniques in designing software tests. Some of the most commonly used techniques include Boundary Value Analysis (BVA), Equivalence Class Partitioning, Decision Table Based Testing, State Transition Testing, Use Case Testing, and Error Guessing.

- Specification-based Design Techniques:

- Boundary Value Analysis (BVA): Boundary value analysis, a black-box testing technique, focuses on inputs at the edges or boundaries of valid ranges. By testing these critical areas (minimum, maximum, just above/below), along with valid inputs within each range, testers ensure the software handles extreme values and transitions correctly, improving software reliability.

- Equivalence Class Partitioning: Equivalence Class Partitioning is a black-box testing technique that divides input data into classes whose members are expected to be handled the same way. By designing test cases for each partition, testers achieve efficient test coverage while reducing redundancy. This method helps identify bugs related to invalid inputs and mishandled input classes, and it is often combined with boundary value analysis to cover the edges of each class.

- Decision Table Based Testing: Decision Table Based Testing, a black-box technique, tackles applications with complex rules. It uses a table to map various input combinations to their expected outputs. This systematic approach ensures every combination of the listed conditions is tested, improving test coverage and identifying issues with intricate logic.

- Use Case Testing: Use Case Testing focuses on how users interact with the software. By designing tests around typical user actions (use cases), testers ensure the system functions as expected, meets user needs, and delivers a positive user experience. This approach promotes user-friendly, reliable software aligned with real-world use.

- Model-based / Model Checking Design Techniques:

- State Transition Testing: State transition testing, a black-box technique, ensures software behaves correctly when switching between states (like on/off, locked/unlocked). By identifying system states and their triggers, testers design tests to verify smooth transitions and expose unexpected behavior. This is particularly useful for applications with distinct modes or user flows.

- Structure-based Design Techniques:

- Control Flow Testing: Control flow testing, a white-box testing method, analyzes a program's code structure. By examining execution paths, testers design test cases to ensure proper functionality. Often used during unit testing by developers, it helps identify and target potential bugs within the code's logic.

- Data Flow Testing: Data flow testing, a white-box technique, examines how data moves through a program. By analyzing where variables are defined and used, testers design test cases to expose issues with data flow, like uninitialized variables or unexpected modifications. This helps ensure data integrity and prevent logic errors.

- Experience-based Design Techniques:

- Error Guessing: Error guessing is a black-box testing technique where testers leverage their experience to predict and uncover bugs. By thinking like a user and considering areas outside formal testing, they identify potential issues that might otherwise be missed, enhancing software quality.

- Fault-based Design Techniques:

- Fault-Based Testing: Fault-based testing flips the script. Instead of reacting to bugs, testers predict likely faults (such as logic errors or wrong operators) and design test cases to expose them. By injecting simulated faults into the code, as in mutation testing, they check whether the tests detect those faults, revealing weaknesses in both the code and the test suite and improving software reliability.

- Taxonomy-Based Testing: Taxonomy-based testing leverages a categorized list of software defects (taxonomy) to guide test case design. By mapping requirements to potential defect categories, testers create targeted tests that identify bugs early in development, improving efficiency and software quality.
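Three of the specification-based techniques above can be sketched against hypothetical rules: a ticket-pricing function for equivalence class partitioning and boundary value analysis, and a two-condition discount rule for decision table testing. The age ranges, prices, and discounts are invented for illustration. Note how boundary value analysis tests each edge (0, 12, 13, 64, 65, 120) plus the first invalid value on either side, while equivalence partitioning needs only one representative per class.

```python
from itertools import product
from typing import Optional

def ticket_price(age: int) -> int:
    """Hypothetical rule: ages 0-12 pay 5, 13-64 pay 10, 65-120 pay 7; anything else is invalid."""
    if age < 0 or age > 120:
        raise ValueError(f"invalid age: {age}")
    if age <= 12:
        return 5
    if age <= 64:
        return 10
    return 7

def outcome(age: int) -> Optional[int]:
    """Price for a valid age, or None when the input is rejected."""
    try:
        return ticket_price(age)
    except ValueError:
        return None

# Equivalence class partitioning: one representative per class, valid and invalid.
EQUIVALENCE_CLASSES = {
    "below range": (-5, None),
    "child": (8, 5),
    "adult": (30, 10),
    "senior": (80, 7),
    "above range": (150, None),
}

# Boundary value analysis: each class edge plus the value just outside the valid range.
BOUNDARY_VALUES = {-1: None, 0: 5, 12: 5, 13: 10, 64: 10, 65: 7, 120: 7, 121: None}

def discount_percent(is_member: bool, has_coupon: bool) -> int:
    """Hypothetical business rule combining two conditions."""
    if is_member:
        return 20 if has_coupon else 10
    return 5 if has_coupon else 0

# Decision table: one rule per combination of conditions -> expected action.
DECISION_TABLE = {
    (True, True): 20,
    (True, False): 10,
    (False, True): 5,
    (False, False): 0,
}

def run_checks() -> None:
    for name, (age, expected) in EQUIVALENCE_CLASSES.items():
        assert outcome(age) == expected, f"class {name!r} failed for age {age}"
    for age, expected in BOUNDARY_VALUES.items():
        assert outcome(age) == expected, f"boundary {age} failed"
    # Two boolean conditions give 2**2 = 4 rules; the table must list all of them.
    assert set(DECISION_TABLE) == set(product([True, False], repeat=2))
    for (is_member, has_coupon), expected in DECISION_TABLE.items():
        assert discount_percent(is_member, has_coupon) == expected

run_checks()
print("equivalence, boundary, and decision-table checks passed")
```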

List of Test Design Techniques

| Test Basis | Test Design Category | Test Design Technique |
|---|---|---|
| Informal Requirements | Specification-based | Equivalence Partitioning, Boundary Value Analysis, Decision Table, Cause and Effect Graphing, Use Case Testing, User Story Testing, Classification Tree, Ad hoc Testing |
| Semiformal or formal requirements | Model-based, Model checking | State Transition Testing, Syntax Testing |
| Structure (e.g., code) | Structure-based | Control-flow Testing, Data-flow Testing, Path Testing, Condition Testing, Mutation Testing |
| Experience | Experience-based | Error Guessing, Exploratory Testing, Attack Testing, Checklists |
| Faults, Failures | Fault-based | Fault-based Testing, Taxonomy-based Testing |
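The fault-based idea behind the Mutation Testing entry in the table can be sketched by hand. In this hypothetical example, each of two mutants of `is_adult` injects a single fault. A test suite "kills" a mutant when the suite fails against it. Dedicated mutation testing tools generate mutants automatically. The functions and suites here are illustrative assumptions.

```python
from typing import Callable

Predicate = Callable[[int], bool]


def is_adult(age: int) -> bool:
    """Original code under test: adults are 18 or older."""
    return age >= 18


# Hand-written mutants, each injecting one small fault.
def mutant_boundary(age: int) -> bool:
    return age > 18   # relational operator changed: >= became >


def mutant_constant(age: int) -> bool:
    return age >= 21  # constant changed: 18 became 21


MUTANTS: list[Predicate] = [mutant_boundary, mutant_constant]


def weak_suite(fn: Predicate) -> bool:
    """Passes if fn answers correctly for values far from the boundary."""
    return fn(30) is True and fn(5) is False


def strong_suite(fn: Predicate) -> bool:
    """Adds the boundary values 17 and 18."""
    return weak_suite(fn) and fn(17) is False and fn(18) is True


def mutation_score(suite: Callable[[Predicate], bool], mutants: list[Predicate]) -> float:
    """Fraction of mutants killed, i.e. mutants for which the suite fails."""
    if not suite(is_adult):
        raise ValueError("the suite must pass against the original code")
    killed = sum(1 for mutant in mutants if not suite(mutant))
    return killed / len(mutants)


if __name__ == "__main__":
    print("weak suite score:", mutation_score(weak_suite, MUTANTS))
    print("strong suite score:", mutation_score(strong_suite, MUTANTS))
```

The weak suite never tests the boundary, so both mutants survive (a score of 0.0). Adding the boundary values 17 and 18 kills both mutants (a score of 1.0).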

Steps Involved in Test Design

Designing tests involves a series of steps: identifying software requirements, analyzing software requirements, crafting test cases, reviewing and approving test cases, executing test cases, evaluating test results, and then reporting defects.

- Identifying Software Requirements: The foundation lies in understanding the software's requirements, encompassing functional requirements, non-functional requirements, and user interface (UI) requirements.

- Analyzing Software Requirements: After identifying the requirements, a thorough analysis is needed to pinpoint which aspects require testing. This involves scrutinizing the software's inputs, outputs, and functionalities.

- Crafting Test Cases: Based on the requirements analysis, specific, measurable, achievable, relevant, and time-bound (SMART) test cases are created.

- Reviewing and Approving Test Cases: Before the test cases are executed, other testers and stakeholders review them to ensure clarity and effectiveness.

- Executing Test Cases: Test cases can be executed manually or through automation tools.

- Evaluating Test Results: The results from the test cases are meticulously evaluated to determine if the software functions as expected.

- Reporting Defects: Any discovered defects are documented and reported to the development team for rectification.

By following these steps, you can establish a comprehensive test design strategy that significantly improves your software's quality.

What Are Test Cases in Software Testing?

Test cases in software testing are fundamental components that outline specific inputs, actions, and expected outcomes to assess the functionality of a software application. They serve as a structured approach to identify software defects and ensure the application performs as intended.

Elements of a Test Case

Writing clear and comprehensive test cases is essential for thorough software testing. A well-defined test case acts as a blueprint, outlining the steps to verify a specific feature and the expected results. Let's break down the key elements that make up a solid test case: Test Case ID, Test Case Description, Test Steps, Expected Results, Pass/Fail Criteria, Pre-Conditions, and Post-Conditions.

- Test Case ID: A unique identifier for the test case.

- Test Case Description: A clear and concise explanation of the functionality being tested.

- Test Steps: A sequential list of actions to be performed during the test.

- Expected Results: The anticipated outcome for each test step.

- Pass/Fail Criteria: The definition of success or failure for the test case.

- Pre-Conditions: Any specific conditions that need to be established before running the test.

- Post-Conditions: The state of the application after the test execution.
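The elements listed above can be captured as a simple record. This sketch assumes a hypothetical `Cart` as the system under test. A case passes only when every step's check succeeds. Pre- and post-conditions are kept as documentation text rather than executable checks.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Step:
    action: str                  # what the tester or script does
    expected: str                # anticipated outcome of this step
    check: Callable[[], bool]    # performs the action; True if the outcome matches


@dataclass
class TestCaseRecord:
    case_id: str                 # Test Case ID
    description: str             # Test Case Description
    steps: list[Step]            # Test Steps with their Expected Results
    pre_conditions: list[str] = field(default_factory=list)
    post_conditions: list[str] = field(default_factory=list)

    def run(self) -> str:
        """Pass/fail criterion used here: every step's check must return True."""
        for number, step in enumerate(self.steps, start=1):
            if not step.check():
                return (f"{self.case_id}: FAIL at step {number} "
                        f"({step.action}); expected: {step.expected}")
        return f"{self.case_id}: PASS"


class Cart:
    """Hypothetical system under test: a minimal shopping cart."""

    def __init__(self) -> None:
        self.items: list[str] = []

    def add(self, item: str) -> int:
        self.items.append(item)
        return len(self.items)

    def clear(self) -> int:
        self.items.clear()
        return len(self.items)


cart = Cart()
tc_001 = TestCaseRecord(
    case_id="TC-001",
    description="Adding an item and then clearing the cart updates the item count",
    pre_conditions=["Cart exists and is empty"],
    steps=[
        Step("Add 'book' to the cart", "cart holds 1 item", lambda: cart.add("book") == 1),
        Step("Clear the cart", "cart holds 0 items", lambda: cart.clear() == 0),
    ],
    post_conditions=["Cart is empty"],
)

if __name__ == "__main__":
    print(tc_001.run())
```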

In software development, test cases act as meticulous inspectors, scrutinizing applications to detect potential issues early on. They are essential for building high-quality software by enabling early defect detection, improved software quality, enhanced user experience, and regression testing. This means identifying bugs early to reduce fixing costs, ensuring the application meets all requirements, verifying it functions as intended for users, and re-running tests to confirm new changes don't introduce problems. By effectively designing and executing test cases, software development teams can deliver high-quality applications that meet user expectations and function reliably.

What Is a Test Charter?

In software testing, a test charter serves as a formal document that outlines the strategy, objectives, and overall scope for a testing project, particularly within the context of exploratory testing. It functions as a roadmap for testers, guiding them throughout the testing process and ensuring their efforts align with the project's goals.

- Purposes of a Test Charter

- Maintaining Focus and Direction: During exploratory testing sessions, testers are encouraged to think creatively and delve into uncharted areas. A well-defined test charter helps them maintain focus on the project's overarching objectives and avoid getting sidetracked by irrelevant details.

- Enhancing Test Coverage: Exploratory testing is a dynamic process that prioritizes discovery over scripted procedures. A well-crafted test charter can guide testers in identifying areas that traditional testing methods might overlook, leading to more comprehensive test coverage.

- Improved Communication and Collaboration: The test charter serves as a central point of communication for the testing team. It fosters collaboration by ensuring everyone is on the same page regarding the testing goals, priorities, and overall approach.

- Components of a Test Charter

- Project Information: This section provides basic details about the project under test, such as the project name, version, and target release date.

- Testing Objectives: The test charter clearly outlines the specific goals that the testing process aims to achieve. These objectives should be measurable and aligned with the overall project requirements.

- Scope of Testing: The scope section defines the boundaries of the testing effort. It specifies which areas of the software will be tested and which areas will be excluded.

- Entry and Exit Criteria: The charter establishes clear criteria for determining when testing can begin (entry criteria) and when it can be concluded (exit criteria). Entry criteria might include the completion of specific development milestones. Exit criteria could be based on reaching a certain level of test coverage or on reducing open critical bugs to an agreed maximum.

- Resources: This section outlines the resources that will be available to the testing team, such as hardware, software, and access to development environments.

- Roles and Responsibilities: The charter clearly defines the roles and responsibilities of each team member involved in the testing process. This includes testers, developers, and other stakeholders.

- Schedule and Timelines: The charter establishes a timeline for the testing effort, outlining key milestones and deadlines.
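Entry and exit criteria are easiest to apply when they are stated as checks. The sketch below assumes two hypothetical exit criteria: a minimum share of requirements tested and a maximum number of open critical defects. It reports every criterion that is not yet met. Real projects define their own criteria and thresholds.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExitCriteria:
    min_requirement_coverage: float   # e.g. 0.95 = at least 95% of requirements tested
    max_open_critical_defects: int    # e.g. 0 = no open critical defects allowed


@dataclass(frozen=True)
class TestStatus:
    requirements_total: int
    requirements_tested: int
    open_critical_defects: int


def exit_criteria_met(criteria: ExitCriteria, status: TestStatus) -> tuple[bool, list[str]]:
    """Return (met, reasons); reasons lists each exit criterion not yet satisfied."""
    if status.requirements_total <= 0:
        raise ValueError("requirements_total must be positive")
    reasons: list[str] = []
    coverage = status.requirements_tested / status.requirements_total
    if coverage < criteria.min_requirement_coverage:
        reasons.append(
            f"requirement coverage {coverage:.0%} is below {criteria.min_requirement_coverage:.0%}"
        )
    if status.open_critical_defects > criteria.max_open_critical_defects:
        reasons.append(
            f"{status.open_critical_defects} open critical defects "
            f"(allowed: {criteria.max_open_critical_defects})"
        )
    return (not reasons, reasons)


if __name__ == "__main__":
    criteria = ExitCriteria(min_requirement_coverage=0.95, max_open_critical_defects=0)
    print(exit_criteria_met(criteria, TestStatus(40, 39, 0)))
    print(exit_criteria_met(criteria, TestStatus(40, 30, 2)))
```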

What Are the Differences Between a Test Case and a Test Charter?

In software testing, understanding the differences between test cases and test charters is crucial. While test cases focus on verifying specific functionalities, test charters define the scope, objectives, and approach for testing projects. This comparison table elaborates on their purposes, levels of detail, content, creation time, and maintenance responsibilities.

| Feature | Test Case | Test Charter |
|---|---|---|
| Purpose | Verify a specific functionality of an application | Outline the scope, objectives, and approach for testing |
| Level of detail | Low-level, specific | High-level |
| Content | Steps, expected results, pass/fail criteria | Scope, objectives, deliverables, roles, responsibilities |
| Created at | During test design | At the beginning of a testing project |
| Maintained by | Testers | Test Lead or Project Manager |

What Is a Test Condition?

A test condition in software testing is a specific state, scenario, or set of inputs used to evaluate a particular aspect of a software application's behavior. Derived from software requirements, test conditions ensure that all functionalities are thoroughly examined.

- Key Areas of Test Conditions

- Functionality: Assess if the feature performs as designed under specified conditions.

- Usability: Determine if the feature is user-friendly and intuitive under various scenarios.

- Performance: Evaluate the software's speed and resource consumption under given conditions.

- Compatibility: Check if the software operates as expected across different operating systems, browsers, and devices.

- Categories of Test Conditions

- Valid vs. Invalid Conditions: Valid conditions represent expected user inputs or scenarios, while invalid conditions test unexpected inputs or edge cases to ensure robustness.

- Positive vs. Negative Conditions: Positive conditions assess if the software functions correctly under normal circumstances, while negative conditions evaluate how it handles incorrect or unexpected inputs.

- Functional vs. Non-functional Conditions: Functional conditions target core functionalities, while non-functional conditions assess aspects like performance, usability, and security.
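Test conditions for a single input can be listed alongside their categories. The function `parse_quantity` and its 1 to 99 range are hypothetical. Valid (positive) conditions expect a parsed value. Invalid (negative) conditions expect the input to be rejected with `ValueError`.

```python
def parse_quantity(text: str) -> int:
    """Hypothetical function under test: accepts whole numbers from 1 to 99."""
    value = int(text)  # raises ValueError for non-numeric text such as "abc" or ""
    if not 1 <= value <= 99:
        raise ValueError(f"quantity out of range: {value}")
    return value


# Each test condition: (category, input, expected value or expected exception type).
CONDITIONS = [
    ("valid / positive", "1", 1),
    ("valid / positive", "42", 42),
    ("valid / positive", "99", 99),
    ("invalid / negative", "0", ValueError),
    ("invalid / negative", "100", ValueError),
    ("invalid / negative", "abc", ValueError),
    ("invalid / negative", "", ValueError),
]


def condition_holds(text: str, expected: object) -> bool:
    """True when parse_quantity behaves as the test condition expects."""
    try:
        result = parse_quantity(text)
    except ValueError:
        return expected is ValueError
    return result == expected


if __name__ == "__main__":
    for category, text, expected in CONDITIONS:
        status = "ok" if condition_holds(text, expected) else "FAILED"
        print(f"{category:<20} input={text!r:<7} {status}")
```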

What Is a Test Process in Software Testing?

A test process in software testing is a structured approach that ensures the software meets quality standards and functions as intended. It involves several key phases, each critical for thorough evaluation: requirement analysis, test planning, test design, test environment setup, test execution, defect reporting and tracking, and test closure.

- Requirement Analysis: Understanding and analyzing testing requirements based on project documentation.

- Test Planning: Developing a detailed test plan that outlines the testing strategy, objectives, resources, schedule, and scope.

- Test Design: Creating detailed test cases and test scripts based on the requirements and planning phase.

- Test Environment Setup: Preparing the necessary hardware and software environment to execute tests.

- Test Execution: Running the test cases and documenting any defects or issues found.

- Defect Reporting and Tracking: Logging defects, prioritizing them, and tracking their resolution.

- Test Closure: Analyzing test results, documenting the testing process, and ensuring all testing objectives are met.

Following a well-defined test process ensures comprehensive testing, defect detection, and software quality improvement.

What Is a Software Testing Strategy?

A software testing strategy is a crucial document within the Software Development Life Cycle (SDLC), guiding the testing process for comprehensive evaluation of functionality, performance, and usability. Key aspects include objectives and scope, testing techniques, test levels and types, entry and exit criteria, resource allocation, risk management, and tools and technologies.

- Objectives and Scope: Defines testing objectives like defect identification and software quality enhancement. Outlines the scope of testing, specifying which functionalities and modules will undergo evaluation.

- Testing Techniques: Specifies testing methods such as black-box and white-box testing. Includes performance, usability, and security testing tailored to application needs.

- Test Levels and Types: Determines testing levels like unit, integration, system, and acceptance testing. Specifies functional, non-functional, and regression tests within each level.

- Entry and Exit Criteria: Sets clear criteria for test initiation and conclusion. Entry criteria may include completion of milestones, while exit criteria define successful testing benchmarks.

- Resource Allocation: Efficiently allocates resources like personnel, tools, and budget for testing plan execution.

- Risk Management: Identifies and addresses potential testing risks such as incomplete requirements or schedule constraints.

- Tools and Technologies: Specifies testing tools like management systems, automation frameworks, and defect trackers for efficient testing.

Establishing a comprehensive software testing strategy ensures systematic evaluation, enhancing software quality, reliability, and user satisfaction within the Software Development Life Cycle (SDLC).

What Are the Software Testing Guidelines?

Software testing guidelines encompass principles followed by software development teams to assure software quality. These guidelines advocate for varied testing techniques, meticulous documentation, continuous testing, end-user involvement, test-driven development (TDD), and utilization of testing frameworks.

- Embracing Diverse Testing Techniques: Employing a spectrum of testing methods ensures thorough software examination, identifying strengths and weaknesses across different approaches.

- Documenting the Testing Process: Comprehensive documentation, including test plans, cases, and results, aids in progress tracking, identifying improvements, and ensuring holistic software testing.

- Continuous Testing and Monitoring: Continuous testing throughout the development lifecycle detects and rectifies bugs early, enhancing software reliability and performance.

- Involving End-Users: Engaging end-users early and consistently in the testing process ensures software alignment with user needs and usability standards.

- Test-Driven Development (TDD): TDD methodology advocates writing tests before code, ensuring testability and requirement adherence in software development.

- Utilizing Testing Frameworks: Testing frameworks offer automation tools and libraries, streamlining testing processes, saving time, and elevating test quality.
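The red, green, refactor cycle of test-driven development can be sketched with Python's built-in `unittest` module. The `slugify` function and its rules are hypothetical. The tests are written first to state the required behavior, and the simplest implementation that satisfies them follows.

```python
import re
import unittest


# Step 1 (red): the tests are written first and describe the required behavior.
class SlugifyTest(unittest.TestCase):
    def test_lowercases_and_joins_words_with_hyphens(self):
        self.assertEqual(slugify("Hello World"), "hello-world")

    def test_strips_punctuation(self):
        self.assertEqual(slugify("Testing, 1 2 3!"), "testing-1-2-3")

    def test_empty_string_stays_empty(self):
        self.assertEqual(slugify(""), "")


# Step 2 (green): the simplest implementation that makes the tests pass.
def slugify(title: str) -> str:
    words = re.findall(r"[a-z0-9]+", title.lower())
    return "-".join(words)


# Step 3 (refactor): with the tests in place, the implementation can be
# cleaned up while the suite is re-run to confirm nothing broke.
if __name__ == "__main__":
    unittest.main(argv=["tdd-demo"], exit=False)
```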

What Is Software Testing Documentation?

Software testing documentation is a critical element of the Software Development Life Cycle (SDLC). It involves creating and maintaining various documents that detail the testing process, serving multiple purposes such as providing a clear understanding of the testing strategy, facilitating communication, improving efficiency, and acting as a historical record.

- Provide Clear Understanding of the Testing Strategy: Documentation outlines the testing approach, detailing the scope, techniques, and expected outcomes.

- Facilitate Communication and Collaboration: Acts as a communication bridge among testers, developers, and stakeholders, ensuring a shared understanding of the testing process.

- Improve Efficiency and Effectiveness in Testing: Well-documented tests are easier to execute and maintain, reducing redundancy and helping testers identify and resolve issues effectively.

- Serve as a Historical Record: Provides a permanent record of testing activities, invaluable for future reference during regression testing or troubleshooting.

Types of Software Testing Documentation

- Test Plan: A high-level document outlining the overall testing strategy, defining the scope, resources, schedule, and risk assessment.

- Test Cases: Detailed specifications for individual tests, including test ID, functionality description, expected results, and test steps.

- Test Data: Data used to execute test cases, including valid, invalid, and edge cases to test application boundaries.

- Requirement Traceability Matrix (RTM): Maps software requirements to corresponding test cases, ensuring thorough testing of all requirements.

- Test Scripts: Step-by-step instructions for automating test case execution, often used with automated testing tools.

- Test Incident Reports (Bugs): Formal reports documenting issues found during testing, including bug description, reproduction steps, and severity.

- Test Execution Reports: Summarize test execution results, detailing the number of test cases passed, failed, or skipped, and relevant execution details.

- Test Summary Report: A high-level overview of the testing process and results, including test coverage, identified defects, and overall test status.

By incorporating comprehensive software testing documentation, development teams can ensure a systematic and efficient evaluation of software, enhancing quality, reliability, and user satisfaction.
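A Requirement Traceability Matrix can be derived directly from the links between test cases and requirements. The requirement and test case IDs below are hypothetical. The sketch builds the matrix, lists requirements that no test covers, and flags test cases that point at unknown requirements.

```python
# Hypothetical requirement and test case IDs, for illustration only.
REQUIREMENTS: dict[str, str] = {
    "REQ-1": "User can log in with valid credentials",
    "REQ-2": "Account locks after three failed logins",
    "REQ-3": "User can reset a forgotten password",
}

# Each test case lists the requirement(s) it verifies.
TEST_CASES: dict[str, list[str]] = {
    "TC-101": ["REQ-1"],
    "TC-102": ["REQ-1", "REQ-2"],
    "TC-103": ["REQ-2"],
    "TC-104": ["REQ-9"],  # refers to a requirement that does not exist
}


def build_rtm(requirements: dict[str, str],
              test_cases: dict[str, list[str]]) -> dict[str, list[str]]:
    """Map each requirement ID to the sorted IDs of the test cases that cover it."""
    matrix: dict[str, list[str]] = {req_id: [] for req_id in requirements}
    for tc_id, req_ids in test_cases.items():
        for req_id in req_ids:
            if req_id in matrix:
                matrix[req_id].append(tc_id)
    return {req_id: sorted(tc_ids) for req_id, tc_ids in matrix.items()}


def orphan_test_cases(requirements: dict[str, str],
                      test_cases: dict[str, list[str]]) -> list[str]:
    """Test cases that reference at least one unknown requirement ID."""
    return sorted(tc_id for tc_id, req_ids in test_cases.items()
                  if any(req_id not in requirements for req_id in req_ids))


rtm = build_rtm(REQUIREMENTS, TEST_CASES)
uncovered = [req_id for req_id, tc_ids in rtm.items() if not tc_ids]

if __name__ == "__main__":
    for req_id, tc_ids in rtm.items():
        print(f"{req_id}: {', '.join(tc_ids) or 'NOT COVERED'}")
    print("orphan test cases:", orphan_test_cases(REQUIREMENTS, TEST_CASES))
```

Here `REQ-3` has no covering test case, and `TC-104` references a requirement that does not exist. A real RTM review would raise both gaps.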

What Are the Software Testing Tools?

Software testing tools help teams verify the reliability, completeness, and performance of software products. They support activities ranging from unit and integration testing to test management and defect tracking, helping teams meet their planned testing requirements. These tools are essential for evaluating software quality and for managing both functional tests (verifying features) and non-functional tests (assessing performance, usability, and security). Various tools are available, each with unique advantages and disadvantages.

- Test Documentation Tools

Selecting the right test documentation tool is essential for effective software testing and quality assurance. With numerous tools available, each offering unique features and strengths, it can be challenging to determine the best fit for your needs. This list highlights the top 10 test documentation tools based on the number of users, categorizing them as open-source or paid, and identifying their key testing strengths.

| Rank | Tool Name | Open-Source or Paid | Testing Strength |
|---|---|---|---|
| 1 | TestRail | Paid | Comprehensive test case management |
| 2 | Zephyr | Paid | JIRA integration, agile testing |
| 3 | TestLink | Open-Source | Web-based test management |
| 4 | PractiTest | Paid | End-to-end test management |
| 5 | qTest | Paid | Scalable, enterprise-level |
| 6 | Xray | Paid | JIRA integration, full-featured |
| 7 | TestComplete | Paid | Automated UI testing |
| 8 | JIRA (with plugins) | Paid | Versatile project and test management |
| 9 | Testpad | Paid | Simplicity, manual testing focus |
| 10 | SpiraTest | Paid | Requirements and test management |

- Test Automation Tools

Explore the top 10 test automation tools, ranked by user popularity. This table presents each tool's ranking, licensing model, and testing strengths. From Selenium to Katalon Studio and Appium, discover the ideal solution for your testing requirements in this curated compilation.

| Rank | Tool Name | Open-Source or Paid | Testing Strength |
|---|---|---|---|
| 1 | Selenium | Open-Source | Web Automation |
| 2 | Katalon Studio | Paid | Web, API, Mobile |
| 3 | Appium | Open-Source | Mobile Automation |
| 4 | TestComplete | Paid | Web, Desktop, Mobile |
| 5 | Postman | Paid | API Testing |
| 6 | Protractor | Open-Source | AngularJS Applications |
| 7 | SoapUI | Paid | API Testing |
| 8 | TestProject | Open-Source | Web, Mobile, API |
| 9 | Cucumber | Open-Source | BDD Testing |
| 10 | JUnit | Open-Source | Unit Testing |

- Defect Management Tools

Explore the top 10 defect management tools, ranked by user popularity. This table details each tool's ranking, licensing model, and testing strengths. From Jira to Monday.com, find the ideal solution for effective defect tracking in your projects.

| Rank | Tool Name | Open-Source or Paid | Testing Strength |
|---|---|---|---|
| 1 | Jira | Paid | Comprehensive Issue Tracking |
| 2 | Bugzilla | Open-Source | Robust Bug Tracking |
| 3 | Redmine | Open-Source | Flexible Issue Tracking |
| 4 | MantisBT | Open-Source | Simple Bug Tracking |
| 5 | Trello | Paid | Visual Task Management |
| 6 | Asana | Paid | Task and Issue Tracking |
| 7 | YouTrack | Paid | Agile Project Management |
| 8 | Backlog | Paid | Integrated Issue Tracking |
| 9 | Trac | Open-Source | Enhanced Wiki and Issue Tracking |
| 10 | Monday.com | Paid | Customizable Workflow Management |

- Performance Testing Tools

Explore the top 10 performance testing tools, ranked by user adoption. This table provides each tool's ranking, license type, and testing strengths. From Apache JMeter to Artillery, identify the best solutions for effective performance and load testing in your software projects.

| Rank | Tool Name | Open-Source or Paid | Testing Strength |
|---|---|---|---|
| 1 | Apache JMeter | Open-Source | Load Testing, Stress Testing |
| 2 | LoadRunner | Paid | Load Testing, Performance Testing |
| 3 | Gatling | Open-Source | High-Performance Load Testing |
| 4 | NeoLoad | Paid | Continuous Performance Testing |
| 5 | BlazeMeter | Paid | Continuous Testing, Load Testing |
| 6 | WebLOAD | Paid | Load Testing, Performance Monitoring |
| 7 | k6 | Open-Source | Load Testing, Performance Testing |
| 8 | Locust | Open-Source | Scalable Load Testing |
| 9 | Silk Performer | Paid | Cloud-Based Load Testing |
| 10 | Artillery | Open-Source | Load Testing, Functional Testing |

- Security Testing Tools

Explore the top 10 security testing tools, ranked by user adoption. This table details each tool's ranking, licensing model, and testing strengths. From OWASP ZAP to OpenVAS, find the best solutions for robust security and vulnerability assessment in your software projects.

| Rank | Tool Name | Open-Source or Paid | Testing Strength |
|---|---|---|---|
| 1 | OWASP ZAP | Open-Source | Web Application Security |
| 2 | Burp Suite | Paid | Web Vulnerability Scanning |
| 3 | Nessus | Paid | Vulnerability Assessment |
| 4 | Metasploit | Open-Source | Penetration Testing |
| 5 | Acunetix | Paid | Web Application Security |
| 6 | Wireshark | Open-Source | Network Protocol Analysis |
| 7 | Veracode | Paid | Application Security Testing |
| 8 | QualysGuard | Paid | Vulnerability Management |
| 9 | Netsparker | Paid | Web Application Security |
| 10 | OpenVAS | Open-Source | Vulnerability Scanning |

What Are the Best Practices in Software Testing?

Implementing best practices in software testing ensures high-quality software by verifying functionalities, detecting defects early, and aligning with user needs.

- Define Clear Objectives: Establish measurable testing objectives aligned with project goals to ensure focused and efficient testing.

- Early Testing Involvement: Start testing early in the development lifecycle to identify and address defects promptly, reducing costs and time.

- Comprehensive Test Planning: Develop detailed test plans outlining scope, approach, resources, and schedule for organized and thorough testing.

- Risk-Based Testing: Prioritize testing efforts based on risk and impact to ensure critical functionalities are tested thoroughly.

- Automated and Manual Testing: Implement test automation for efficiency and complement it with manual exploratory testing for a human perspective.

- Continuous Integration and Testing: Integrate testing into the continuous integration pipeline for ongoing verification and smooth feature integration.

- Test Case Management: Maintain a robust system to organize, track, and update test cases for comprehensive coverage and easy reuse.

- Performance and Security Testing: Perform regular performance and security testing to ensure the application meets standards and protects against threats.

- User Acceptance Testing (UAT): Involve end-users in UAT to validate that the software meets their needs and expectations.

- Effective Communication: Foster clear communication between developers, testers, and stakeholders to ensure alignment on quality goals.

- Detailed Defect Reporting: Report defects with detailed information for quick resolution and efficient issue management.

- Test Environment Management: Maintain a stable test environment that mirrors production to ensure accurate testing results.

By following these best practices, software development teams can ensure thorough testing, early defect detection, and the delivery of high-quality software that meets user needs and expectations.
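One common way to apply risk-based testing is to rate each feature's likelihood of failure and its impact, then test the highest-scoring features first. The 1 to 5 scales, the likelihood times impact score, and the features below are assumptions chosen for illustration. Teams use many variations of this scheme.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeatureRisk:
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain) that it fails
    impact: int      # assumed scale: 1 (cosmetic) to 5 (critical to the business)

    @property
    def score(self) -> int:
        # Assumed scoring rule: risk = likelihood x impact.
        return self.likelihood * self.impact


def prioritize(features: list[FeatureRisk]) -> list[FeatureRisk]:
    """Highest risk first; ties go to the higher impact, then alphabetical by name."""
    return sorted(features, key=lambda f: (-f.score, -f.impact, f.name))


# Hypothetical features and ratings, for illustration only.
FEATURES = [
    FeatureRisk("Checkout payment", likelihood=3, impact=5),
    FeatureRisk("Profile avatar upload", likelihood=4, impact=1),
    FeatureRisk("Search suggestions", likelihood=2, impact=2),
    FeatureRisk("Login", likelihood=2, impact=5),
]

if __name__ == "__main__":
    for feature in prioritize(FEATURES):
        print(f"{feature.score:>2}  {feature.name}")
```

With these ratings, checkout payment (15) and login (10) are tested first. The two features that score 4 are ordered by impact, so search suggestions comes before avatar upload.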

What Is a Software Defect?

A software defect occurs when the actual outcome of a system or software application deviates from the expected result. These deviations often stem from mistakes that developers make during the development phase, which lead to bugs. Defects represent failures to meet specified requirements and can degrade software performance. Whether testers, developers, or end-users detect them, defects undermine the expected functionality of software products, particularly once they reach the production environment.

Why Is It Important To Detect Software Defects?

Detecting defects early in development is crucial for enhancing software quality and reliability, ensuring smoother user experiences, and bolstering customer satisfaction. Early defect detection acts as a safety net, preventing issues from escalating, and saves time and resources. Unit testing, automated testing, continuous integration, static code analysis, and peer reviews are effective methods for early detection, reducing rework and enhancing customer confidence. Software defect management is essential for delivering high-quality products and improving future projects.

What Are the Causes of Software Defects?

Software defects can significantly impact the functionality and performance of a software program. These defects can manifest in various ways, from minor glitches to complete system crashes. Understanding the root causes of software defects is crucial for developers to implement effective prevention and mitigation strategies. Some of the common causes of software defects include requirements gathering and specification issues, design flaws, coding errors, testing inefficiencies, and external factors.

- Requirements Gathering and Specification Issues

- Communication Problems: Ineffective communication between developers, stakeholders (clients, product managers), and end-users can lead to misunderstandings and misinterpretations of requirements. This can result in software that deviates from its intended purpose and introduces defects.

- Unrealistic Deadlines: Unrealistic deadlines put pressure on developers and can lead to cutting corners during the requirements gathering process. This can result in incomplete or inaccurate requirements, which can ultimately lead to defects.

- Poor Requirements Management: Lack of proper requirements documentation and version control can make it difficult to track changes and ensure everyone is on the same page. This can lead to confusion and inconsistencies, ultimately causing defects in the final product.

- Design Flaws

- Poor System Architecture: A poorly designed architecture can make the software complex, difficult to maintain, and prone to errors. Issues like tight coupling, lack of modularity, and inadequate scalability can all contribute to defects.

- Design Errors: Faulty design decisions can lead to software that is inefficient, inflexible, or difficult to test. These design flaws can manifest as defects later in the development process.

- Usability Issues: If the software's user interface (UI) design is not intuitive or user-friendly, it can lead to user errors and frustration. Usability testing throughout the development process helps identify and address these issues before they manifest as defects.

- Coding Errors

- Logic Errors: These errors stem from flaws in the program's logic, causing the software to behave incorrectly even if the syntax is perfect. Examples include incorrect conditional statements, infinite loops, or missing error handling.

- Syntax Errors: Syntax errors violate the programming language's grammar rules. These errors are typically caught by compilers or interpreters during the development process, but they can still slip through if proper coding practices are not followed.

- Bad Coding Practices: Inconsistent coding style, lack of code reviews, and inadequate commenting can all contribute to code that is difficult to understand, maintain, and debug, ultimately increasing the risk of defects.

- Testing Inefficiencies

- Incomplete Test Coverage: Testing is crucial for identifying and fixing defects before the software is deployed. However, if the test suite doesn't cover all possible scenarios and edge cases, defects might remain undetected until the software is used in a real-world setting.

- Poor Test Data Selection: Using insufficient or unrealistic test data can lead to missing critical defects during testing. Test data should be representative of real-world usage patterns to effectively identify potential issues.

- Inadequate Testing Environment: Differences between the development and deployment environments can introduce defects that go unnoticed during testing. It's important to ensure the testing environment closely mirrors the production environment to minimize the risk of defects.

- External Factors

- Third-Party Integrations: Integrating with third-party libraries or frameworks can introduce compatibility issues and unexpected behavior, leading to defects. Thorough testing of third-party integrations is essential.

- Hardware and Operating System Issues: The software's interaction with the underlying hardware and operating system can also be a source of defects. Compatibility issues, driver problems, or resource limitations can cause unexpected behavior.

- External Dependencies: Software often relies on external resources like databases, network services, or APIs. Issues with these dependencies can lead to errors and defects in the main software. Monitoring and testing external dependencies is crucial to ensure overall software stability.

By understanding these common causes of software defects, developers can implement strategies to prevent them throughout the development lifecycle. This includes employing rigorous requirements gathering techniques, following design best practices, adopting a coding style guide, implementing unit testing and integration testing, performing thorough system testing before deployment, and carefully managing external dependencies.
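Logic errors and incomplete test coverage often combine. In this hypothetical leap-year example, the buggy version is syntactically valid and passes the typical cases. The defect only surfaces once edge cases derived from the Gregorian calendar's century rule are added.

```python
def is_leap_year_buggy(year: int) -> bool:
    # Syntactically valid, but the logic ignores the century rules.
    return year % 4 == 0


def is_leap_year(year: int) -> bool:
    # Gregorian rule: divisible by 4, except centuries not divisible by 400.
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


# Typical cases only: the buggy version passes these, so the defect goes unnoticed.
TYPICAL_CASES = {2023: False, 2024: True}
# Edge cases derived from the century rules expose the logic error.
EDGE_CASES = {1900: False, 2000: True, 2100: False}


def failing_cases(fn, cases: dict[int, bool]) -> list[int]:
    """Years for which fn disagrees with the expected answer."""
    return [year for year, expected in cases.items() if fn(year) != expected]


if __name__ == "__main__":
    print("buggy, typical cases:", failing_cases(is_leap_year_buggy, TYPICAL_CASES))
    print("buggy, edge cases:   ", failing_cases(is_leap_year_buggy, EDGE_CASES))
    print("fixed, edge cases:   ", failing_cases(is_leap_year, EDGE_CASES))
```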

What Are the Different Types of Software Defects?

Software defects come in diverse forms, impacting functionality and user experience. Explore the various types to enhance your understanding and optimize your development process.

- Functional Defects: Encountered when specific software features fail to operate as intended, such as incorrect calculations or data manipulation errors. Learn how to identify and address these defects for enhanced software reliability.

- Logical Defects: Arising from errors in the software's internal logic, leading to unexpected behavior under certain conditions. Discover strategies to detect and rectify logical defects to ensure consistent software performance.

- Syntax Defects: Violations of programming language syntax rules, preventing successful compilation or interpretation of code. Uncover common syntax defects and techniques to mitigate their impact on software functionality.

- Arithmetic Defects: Resulting from errors in mathematical calculations within the software, leading to inaccurate results or unexpected behavior. Implement best practices to handle arithmetic defects and maintain software accuracy.

- Interface Defects: Issues with the software's user interface or interaction with external systems, hindering usability and causing data inconsistencies. Learn how to optimize UI design and address interface defects for an enhanced user experience.

- Multithreading Defects: Specific to software utilizing multiple threads, causing synchronization or communication issues. Explore techniques to identify and resolve multithreading defects for improved software performance.

- Performance Defects: Affecting software responsiveness, speed, or resource consumption, leading to slow performance or high resource usage. Implement strategies to optimize software performance and mitigate performance defects.

- Boundary and Range Defects: Arising from improper handling of input values outside expected boundaries or ranges, leading to crashes or incorrect calculations. Learn how to handle boundary and range defects effectively to ensure software stability.

- Data Validation Defects: Resulting from inadequate validation and sanitization of user input, allowing for the injection of malicious code. Implement robust data validation techniques to prevent data validation defects and enhance software security.

- Deployment Defects: Occurring during the software deployment process, hindering proper functioning in the production environment. Explore strategies to identify and resolve deployment defects for seamless software deployment.

- Integration Defects: Arising from failures in interactions between different software modules or systems, causing data inconsistencies or system crashes. Learn how to ensure smooth integration and address integration defects effectively.

- Documentation Defects: Hindering user understanding and complicating troubleshooting efforts due to inaccurate or incomplete documentation. Enhance documentation quality to improve user experience and facilitate software usage.

By understanding and effectively addressing these diverse types of software defects, you can optimize your development process and deliver high-quality software products.
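Data validation defects are easiest to see in a concrete injection case. This sketch uses Python's built-in `sqlite3` module with an in-memory database. The `users` table and the lookup functions are hypothetical. The unsafe version builds SQL from raw input, while the safe version passes the input as a query parameter.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """In-memory database with a hypothetical users table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
    conn.executemany(
        "INSERT INTO users (name, role) VALUES (?, ?)",
        [("alice", "admin"), ("bob", "viewer")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list[tuple[str, str]]:
    # Defect: raw user input is pasted into the SQL text without validation.
    query = f"SELECT name, role FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list[tuple[str, str]]:
    # Fix: the ? placeholder sends the input as a value, never as SQL syntax.
    return conn.execute("SELECT name, role FROM users WHERE name = ?", (name,)).fetchall()


PAYLOAD = "nobody' OR '1'='1"

if __name__ == "__main__":
    db = make_db()
    print("unsafe:", find_user_unsafe(db, PAYLOAD))
    print("safe:  ", find_user_safe(db, PAYLOAD))
```

With the payload `nobody' OR '1'='1`, the unsafe query's condition is always true, so it returns every user. The parameterized query treats the whole string as a name and returns nothing.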

What Are the Different Software Testing Roles?

Different software testing roles are crucial to achieving comprehensive test coverage. Each role contributes a unique skill set and area of focus, and together they ensure the quality, reliability, and functionality of applications before they reach users. Examples of these roles include Manual Tester, Test Automation Engineer, Quality Engineer, Test Lead, and Test Manager.

Manual Tester / Test Analyst / Quality Assurance Analyst (QA)

- Description: The manual tester, test analyst, or QA analyst is the foundation of the testing team. They are responsible for designing and executing test cases to identify defects in the software through manual testing methods.

- Responsibilities:

- Analyze software requirements and design documents to understand the functionalities of the software.

- Develop and execute test cases covering various functionalities, user scenarios, and edge cases.

- Report defects identified during testing, including detailed descriptions, severity levels, and expected behavior.

- Participate in test reviews and discussions to ensure comprehensive test coverage.

- Stay up-to-date on testing methodologies and best practices.
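A defect report is easier to act on when every report carries the same fields. The sketch below models one in Python. It assumes a four-level severity scale and adds steps to reproduce and actual behavior alongside the description, severity, and expected behavior listed above; the class and field names are hypothetical and do not mirror the schema of Jira, Bugzilla, or any other tracker.

```python
from dataclasses import dataclass, field
from enum import Enum


class Severity(Enum):
    # Example scale only; each team defines its own severity levels.
    CRITICAL = 1
    MAJOR = 2
    MINOR = 3
    TRIVIAL = 4


@dataclass
class DefectReport:
    """Hypothetical defect record; field names are illustrative."""
    title: str
    severity: Severity
    steps_to_reproduce: list[str]
    expected: str
    actual: str
    environment: str = "unspecified"
    labels: list[str] = field(default_factory=list)

    def is_complete(self) -> bool:
        """Actionable only if a developer can reproduce it and compare outcomes."""
        return bool(
            self.title.strip()
            and self.steps_to_reproduce
            and self.expected.strip()
            and self.actual.strip()
        )

    def summary(self) -> str:
        """One-line summary suitable for a defect list view."""
        return f"[{self.severity.name}] {self.title}"


report = DefectReport(
    title="Checkout total ignores discount code",
    severity=Severity.MAJOR,
    steps_to_reproduce=["Add any item to cart", "Apply code SAVE10", "Open checkout"],
    expected="Total reduced by 10%",
    actual="Total unchanged",
    environment="staging, Chrome",
)
print(report.summary())      # [MAJOR] Checkout total ignores discount code
print(report.is_complete())  # True
```

The `is_complete` check reflects a common team convention: a report without reproduction steps or an expected/actual comparison usually bounces back to the tester.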

Test Automation Engineer / Software Development Engineer in Test (SDET)

- Description: The test automation engineer, also known as a Software Development Engineer in Test (SDET), focuses on automating repetitive test cases using scripting languages and testing frameworks.

- Responsibilities:

- Develop and maintain automated test scripts to improve test efficiency and coverage.

- Select and utilize appropriate testing tools and frameworks.

- Integrate automated tests into the continuous integration/continuous delivery (CI/CD) pipeline.

- Troubleshoot automation scripts and identify root causes of test failures.

- Collaborate with developers to ensure testability of the software.
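As an illustration of an automated test script that can run in a CI/CD pipeline, here is a small suite written with Python's built-in `unittest` module. The function under test, `apply_discount`, is hypothetical. The script exits with status 0 when every test passes and 1 otherwise, which is the usual signal a CI runner uses to pass or fail a pipeline stage.

```python
import sys
import unittest


def apply_discount(total: float, percent: float) -> float:
    """Hypothetical function under test: reduce total by percent, rounded to cents."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(total * (1 - percent / 100), 2)


class ApplyDiscountTests(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(200.0, 10), 180.0)

    def test_zero_discount_leaves_total_unchanged(self):
        self.assertEqual(apply_discount(59.99, 0), 59.99)

    def test_out_of_range_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 150)


def run_suite() -> bool:
    """Run every test in the suite and report whether all of them passed."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTests)
    result = unittest.TextTestRunner(verbosity=2).run(suite)
    return result.wasSuccessful()


if __name__ == "__main__":
    # A non-zero exit status is how most CI/CD runners detect a failed stage.
    sys.exit(0 if run_suite() else 1)
```

In a pipeline, a step such as `python test_discount.py` (file name assumed) is enough: the runner marks the stage failed whenever the script exits non-zero.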

Quality Engineer (QE)

- Description: The quality engineer (QE) combines the skill sets of the manual tester and the test automation engineer and is well-versed in both manual and automated testing techniques.

- Responsibilities:

- Perform manual and exploratory testing to identify defects.

- Develop and maintain automated test scripts.

- Participate in code reviews to identify potential issues from a testing perspective.

- Analyze test results and identify trends to improve software quality.

- Conduct functional testing as well as non-functional testing, such as performance, security, and usability testing.
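Identifying trends usually starts with aggregating results across builds. This sketch uses made-up records in the form (build, test, passed), computes a pass rate per build, and flags tests whose most recent runs have failed consecutively. The record format and the function names `pass_rate_by_build` and `failing_streaks` are assumptions for illustration.

```python
from collections import defaultdict

# Hypothetical test-run records in chronological order: (build_id, test_name, passed).
results = [
    ("build-101", "login", True),
    ("build-101", "checkout", True),
    ("build-101", "search", True),
    ("build-102", "login", True),
    ("build-102", "checkout", False),
    ("build-102", "search", True),
    ("build-103", "login", True),
    ("build-103", "checkout", False),
    ("build-103", "search", False),
]


def pass_rate_by_build(records):
    """Return {build_id: pass_rate}, keeping the order in which builds appear."""
    totals = defaultdict(lambda: [0, 0])  # build -> [passed, total]
    for build, _name, passed in records:
        totals[build][0] += int(passed)
        totals[build][1] += 1
    return {build: passed / total for build, (passed, total) in totals.items()}


def failing_streaks(records):
    """Count consecutive most-recent failures per test (a simple regression signal)."""
    streaks = {}
    for _build, name, passed in records:
        streaks[name] = 0 if passed else streaks.get(name, 0) + 1
    return {name: n for name, n in streaks.items() if n > 0}


for build, rate in pass_rate_by_build(results).items():
    print(f"{build}: {rate:.0%}")
print(failing_streaks(results))
```

With this sample data the pass rate falls from 100% to 67% to 33%, and `checkout` has failed in the last two builds, which points the investigation at a likely regression introduced in `build-102`.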

Test Lead

- Description: The test lead provides direction and guidance to the testing team, ensuring all testing activities are aligned with project goals and deadlines.

- Responsibilities:

- Develop and manage the overall testing strategy for the project.

- Create and assign test plans and test cases to team members.

- Facilitate test execution and track progress.

- Report testing status and identify risks to stakeholders.

- Lead discussions and resolve testing-related issues.

Test Manager

- Description: The test manager oversees all aspects of the testing process, providing leadership and strategic direction to the testing team.

- Responsibilities:

- Develop and manage the testing budget and resources.

- Define testing methodologies and best practices for the team.

- Recruit, train, and mentor testing personnel.

- Manage communication between the testing team, developers, and other stakeholders.

- Analyze test results and metrics to identify areas for improvement.

- Implement and maintain quality assurance processes.
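Two metrics commonly used for this kind of analysis are defect density (defects per thousand lines of code) and defect removal efficiency (the share of all known defects found before release). The Python sketch below computes both; the function names and input numbers are illustrative only.

```python
def defect_density(defects: int, lines_of_code: int) -> float:
    """Defects per thousand lines of code (KLOC)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return defects / (lines_of_code / 1000)


def defect_removal_efficiency(found_before_release: int, found_after_release: int) -> float:
    """Fraction (0..1) of all known defects that were caught before release."""
    total = found_before_release + found_after_release
    if total == 0:
        raise ValueError("no defects recorded")
    return found_before_release / total


# Illustrative numbers only.
print(defect_density(defects=45, lines_of_code=30_000))  # 1.5
print(defect_removal_efficiency(90, 10))                 # 0.9
```

Tracked release over release, a falling defect density or a rising removal efficiency suggests the testing process is improving; either number on its own says little.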

By working together, these roles ensure the quality and reliability of software applications.

How To Become a Software Tester?

Becoming a software tester involves several key steps, including obtaining the right educational qualifications, developing essential skills, gaining practical experience, and earning relevant certifications.

Educational Qualification

While a formal education is not always mandatory for entry-level software testing roles, it can provide a strong foundation in software development principles, testing methodologies, and industry best practices. Here are some educational options to consider:

- Bachelor's Degree in Computer Science or Information Technology: A bachelor’s degree provides essential knowledge in software development, programming languages, databases, and design principles, crucial for understanding complex software functionalities and creating effective test cases.

- Associate's Degree in Software Testing or a Related Field: Associate's degrees in software testing cover test case design, automation, defect management, and methodologies like Agile or Waterfall, providing focused, industry-specific education.

- Bootcamps or Online Courses: Bootcamps and online courses offer intensive, fast-track training for entering the software testing field, providing essential skills in a shorter time than traditional degrees, with a focused learning approach.

- Courses for Non-IT Graduates: Graduates from non-IT fields can move into IT through software testing training, which teaches essential testing skills along with enough software development knowledge to understand development workflows and timelines.

Building Your Skill Set

Regardless of your educational path, building a robust skill set is crucial for software testing success.

- Technical Skills

- Understanding of Software Development Lifecycle (SDLC): Familiarity with the different phases of software development, from requirements gathering to deployment, is essential for effective testing.

- Software Testing Methodologies: Explore testing methodologies such as black-box, white-box, exploratory, and usability testing. Understanding these approaches broadens defect detection capabilities and enhances testing effectiveness.

- Defect Management Tools: Proficiency in bug tracking tools like Jira or Bugzilla is essential for logging, tracking, and reporting software defects during testing, streamlining the defect management process.

- Soft Skills

- Analytical Thinking and Problem-Solving: Strong analytical skills are vital for software testers to identify defect root causes and propose effective solutions, enhancing testing efficiency and software quality.

- Attention to Detail: Attention to detail is vital in spotting discrepancies and ensuring thorough testing coverage, essential for maintaining software quality and reliability.

- Communication Skills: Clear communication with developers, project managers, and stakeholders is essential for sharing test results, collaborating on solutions, and aligning on project objectives, ensuring effective software development processes.

Certifications (Optional)

While not mandatory, industry-recognized certifications demonstrate commitment and enhance resumes. Popular software testing certifications include:

- ISTQB Certified Tester Foundation Level (CTFL): This entry-level certification provides a broad foundation in software testing concepts and best practices.

- Certified Software Test Professional (CSTP): The CSTP certification caters to individuals with experience in software testing and assesses their knowledge of advanced testing methodologies and tools.

- Agile Certified Tester (ACT): This certification is ideal for testers who specialize in Agile development methodologies and want to validate their understanding of testing within an Agile framework.

Building your Portfolio and Network

- Develop a Portfolio: Create a portfolio showcasing your software testing skills and experience, including personal projects, volunteer work, or internships. Highlight your testing approach, tools used, and successful project outcomes.

- Network with Industry Professionals: Connect with fellow software testers and industry professionals through online forums, conferences, or meetups. Engage in discussions, offer insights, and seek informational interviews to learn and discover job opportunities.

What Are the Best Courses for Software Testing?

Software testing courses offer a valuable springboard for anyone launching a career in software testing or looking to strengthen an existing skill set. They can be grouped by focus into foundation, specialization, and certification preparation courses, and are offered on platforms such as Udemy, Coursera, edX, and Pluralsight.

Foundation Courses

These courses provide a comprehensive introduction to software testing fundamentals, equipping you with the foundational knowledge necessary to excel in this field. Topics typically covered include:

- Software Development Lifecycle (SDLC): Gain a thorough understanding of the different phases of software development, from requirements gathering to deployment, to effectively plan and execute testing strategies. (Platforms: Udemy, Coursera, edX)

- Testing Methodologies: Learn about various testing methodologies like black-box testing (focusing on external functionality), white-box testing (leveraging internal code structure), exploratory testing (unstructured, creative test case design), and usability testing (evaluating user experience), allowing you to approach testing from diverse perspectives and uncover a wider range of potential defects. (Platforms: Udemy, Coursera, edX)

- Defect Management Tools: Get familiar with industry-standard bug tracking tools like Jira or Bugzilla, enabling you to efficiently log, track, and report software defects throughout the testing process. (Platforms: Many platforms offer courses that cover bug tracking tools as part of the curriculum, but specific tools might vary.)
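The difference between black-box and white-box test design can be shown on a single function. In this sketch, the hypothetical `shipping_fee` rule (members ship free; other orders under 50.00 pay 5.00) is tested twice: once with cases derived only from the stated rule, and once with cases chosen by reading the code so that each branch runs at least once. The function and case names are illustrative.

```python
def shipping_fee(order_total: float, is_member: bool) -> float:
    """Hypothetical rule: members ship free; non-members pay 5.00 below 50.00."""
    if is_member:
        return 0.0
    if order_total >= 50.0:
        return 0.0
    return 5.0


# Black-box cases: derived only from the stated rule, including its 50.00 boundary.
black_box_cases = [
    ((20.0, True), 0.0),    # member with a small order
    ((49.99, False), 5.0),  # non-member just below the threshold
    ((50.0, False), 0.0),   # non-member exactly at the threshold
]

# White-box cases: chosen by reading the code so each of its three
# return statements executes at least once (full branch coverage here).
white_box_cases = [
    ((10.0, True), 0.0),    # first return: is_member is True
    ((75.0, False), 0.0),   # second return: total meets the threshold
    ((10.0, False), 5.0),   # final return: neither condition holds
]


def failures(cases):
    """Return the argument tuples whose result differs from the expected fee."""
    return [args for args, expected in cases if shipping_fee(*args) != expected]


print(failures(black_box_cases), failures(white_box_cases))  # [] []
```

The two suites overlap but are motivated differently: the black-box cases would stay valid if the function were rewritten, while the white-box cases would need revisiting whenever its branches change.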

Specialization Courses (Platforms: Varied based on specialization)

- Test Automation Courses: Equip testers with the skills to automate repetitive test cases, improving testing efficiency and coverage. These courses typically delve into popular automation frameworks and tools like:

- Selenium: An open-source framework for automating web application testing across various browsers. (Platforms: Udemy, Coursera, Pluralsight)

- Cypress: A modern JavaScript end-to-end testing framework for web applications. (Platforms: Udemy, Pluralsight)

- Robotic Process Automation (RPA) Platforms: Learn how to leverage RPA tools to automate repetitive tasks within the testing process, such as data entry or test environment preparation. (Platforms: UiPath Academy, Automation Anywhere University)

- Performance Testing Courses: Teach methodologies to assess a software application's responsiveness, scalability, and stability under load. These courses often cover industry-standard performance testing tools like:

- LoadRunner: A popular tool for simulating high user loads and analyzing application performance under stress. (Platforms: Micro Focus Learning Center; Micro Focus is now part of OpenText)

- JMeter: An open-source Apache tool for load testing, performance measurement, and functional testing. (The Apache JMeter project provides a user manual and tutorials on its website)

- Gatling: Another open-source tool for load and performance testing, often favored for its ease of use and scalability. (Gatling offers free online documentation and tutorials)

- Security Testing Courses: Provide the knowledge and skills to identify and exploit vulnerabilities in software applications, enhancing the overall security posture. These courses often explore topics like:

- Security Testing Principles: Learn fundamental concepts of secure coding practices, common vulnerabilities, and attack vectors. (Platforms: Udemy, Cybrary)

- Penetration Testing Methodologies: Gain insights into ethical hacking techniques used to identify and exploit vulnerabilities in software applications. (Platforms: SANS Institute, Offensive Security)

- Security Testing Tools: Explore industry-standard tools like Burp Suite (an integrated platform for various security testing activities) or OWASP ZAP (an open-source web application security scanner). (PortSwigger Web Security Academy offers courses for Burp Suite; OWASP ZAP has its own documentation and tutorials)

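Tools such as JMeter, Gatling, and LoadRunner generate load at scale, but the core idea of performance testing, issuing many concurrent requests and summarizing the latency distribution, fits in a short Python sketch. `handle_request` is a hypothetical stand-in for the operation under test; in practice it would be a network call. Because it is CPU-bound here, Python threads contend for the interpreter lock, so the numbers illustrate the measurement method rather than realistic throughput.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(payload_size: int) -> int:
    """Hypothetical stand-in for the operation under test (e.g. an HTTP call)."""
    return sum(range(payload_size))


def timed_call(payload_size: int) -> float:
    """Run one request and return its latency in seconds."""
    start = time.perf_counter()
    handle_request(payload_size)
    return time.perf_counter() - start


def load_test(concurrent_users: int, requests_per_user: int,
              payload_size: int = 10_000) -> dict:
    """Issue requests from a thread pool and summarize latency in milliseconds."""
    total = concurrent_users * requests_per_user
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        latencies_ms = [s * 1000 for s in pool.map(timed_call, [payload_size] * total)]
    return {
        "requests": total,
        "mean_ms": statistics.fmean(latencies_ms),
        # With n=20 the last of the 19 cut points is the 95th percentile;
        # method="inclusive" keeps the estimate within the observed range.
        "p95_ms": statistics.quantiles(latencies_ms, n=20, method="inclusive")[-1],
        "max_ms": max(latencies_ms),
    }


print(load_test(concurrent_users=8, requests_per_user=25))
```

Reporting a high percentile such as p95 next to the mean matters because an average can look healthy while a slow tail of requests still degrades the experience for some users.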

Certification Preparation Courses (Platforms: Varied based on certification)

These courses help you prepare for industry-recognized certifications that validate your software testing knowledge and skills, which can improve your job prospects. Here are some popular certifications and corresponding preparation courses:

- ISTQB Certified Tester Foundation Level (CTFL): This entry-level certification validates a tester's grasp of core software testing concepts and best practices. Courses typically focus on the ISTQB syllabus, covering topics like test planning, test design techniques, test execution, and defect management. (Platforms: Many platforms offer ISTQB Foundation Level prep courses, including Udemy, Coursera, and ISTQB accredited training providers)

- Certified Software Test Professional (CSTP): The CSTP certification caters to individuals with experience in software testing and assesses their knowledge of advanced testing methodologies and tools. Preparation courses delve deeper into areas like test automation, performance testing, and security testing. (Platforms: Limited availability compared to ISTQB; check the certifying body for its approved training providers)

- Agile Certified Tester (ACT): This certification is ideal for testers who specialize in Agile development methodologies and want to validate their understanding of testing within an Agile framework. Courses typically cover Agile principles, testing practices within Agile projects, and Agile testing tools. (Platforms: Agile Testing Alliance offers resources and sometimes instructor-led courses; some platforms like Udemy or Coursera might also offer ACT prep courses)